Percolate queries are also known as Persistent queries, Prospective search, document routing, search in reverse, and inverse search.

The traditional way of conducting searches involves storing documents and performing search queries against them. However, there are cases where we want to apply a query to a newly incoming document to signal a match. Some scenarios where this is desired include monitoring systems that collect data and notify users about specific events, such as reaching a certain threshold for a metric or a particular value appearing in the monitored data. Another example is news aggregation, where users may want to be notified only about certain categories or topics, or even specific "keywords."

In these situations, traditional search is not the best fit, as it assumes the desired search is performed over the entire collection. This process gets multiplied by the number of users, resulting in many queries running over the entire collection, which can cause significant additional load. The alternative approach described in this section involves storing the queries instead and testing them against an incoming new document or a batch of documents.

Google Alerts, AlertHN, Bloomberg Terminal, and other systems that allow users to subscribe to specific content utilize similar technology.

- See percolate for information on creating a PQ table.

- See Adding rules to a percolate table to learn how to add percolate rules (also known as PQ rules). Here's a quick example:

The key thing to remember about percolate queries is that your search queries are already in the table. What you need to provide are documents to check if any of them match any of the stored rules.

You can perform a percolate query via SQL or JSON interfaces, as well as using programming language clients. The SQL approach offers more flexibility, while the HTTP method is simpler and provides most of what you need. The table below can help you understand the differences.

| Desired Behavior | SQL | HTTP |

|---|---|---|

| Provide a single document | CALL PQ('tbl', '{doc1}') |

query.percolate.document{doc1} |

| Provide a single document (alternative) | CALL PQ('tbl', 'doc1', 0 as docs_json) |

- |

| Provide multiple documents | CALL PQ('tbl', ('doc1', 'doc2'), 0 as docs_json) |

- |

| Provide multiple documents (alternative) | CALL PQ('tbl', ('{doc1}', '{doc2}')) |

- |

| Provide multiple documents (alternative) | CALL PQ('tbl', '[{doc1}, {doc2}]') |

- |

| Return matching document ids | 0/1 as docs (disabled by default) | Enabled by default |

| Use document's own id to show in the result | 'id field' as docs_id (disabled by default) | Not available |

| Consider input documents are JSON | 1 as docs_json (1 by default) | Enabled by default |

| Consider input documents are plain text | 0 as docs_json (1 by default) | Not available |

| Sparsed distribution mode | default | default |

| Sharded distribution mode | sharded as mode | Not available |

| Return all info about matching query | 1 as query (0 by default) | Enabled by default |

| Skip invalid JSON | 1 as skip_bad_json (0 by default) | Not available |

| Extended info in SHOW META | 1 as verbose (0 by default) | Not available |

| Define the number which will be added to document ids if no docs_id fields provided (mostly relevant in distributed PQ modes) | 1 as shift (0 by default) | Not available |

To demonstrate how this works, here are a few examples. Let's create a PQ table with two fields:

- title (text)

- color (string)

and three rules in it:

- Just full-text. Query:

@title bag - Full-text and filtering. Query:

@title shoes. Filters:color='red' - Full-text and more complex filtering. Query:

@title shoes. Filters:color IN('blue', 'green')

- SQL

- JSON

- PHP

- Python

- Python-asyncio

- javascript

- Java

- C#

- Rust

- TypeScript

- Go

CREATE TABLE products(title text, color string) type='pq';

INSERT INTO products(query) values('@title bag');

INSERT INTO products(query,filters) values('@title shoes', 'color=\'red\'');

INSERT INTO products(query,filters) values('@title shoes', 'color in (\'blue\', \'green\')');

select * from products;PUT /pq/products/doc/

{

"query": {

"match": {

"title": "bag"

}

},

"filters": ""

}

PUT /pq/products/doc/

{

"query": {

"match": {

"title": "shoes"

}

},

"filters": "color='red'"

}

PUT /pq/products/doc/

{

"query": {

"match": {

"title": "shoes"

}

},

"filters": "color IN ('blue', 'green')"

}$index = [

'table' => 'products',

'body' => [

'columns' => [

'title' => ['type' => 'text'],

'color' => ['type' => 'string']

],

'settings' => [

'type' => 'pq'

]

]

];

$client->indices()->create($index);

$query = [

'table' => 'products',

'body' => [ 'query'=>['match'=>['title'=>'bag']]]

];

$client->pq()->doc($query);

$query = [

'table' => 'products',

'body' => [ 'query'=>['match'=>['title'=>'shoes']],'filters'=>"color='red'"]

];

$client->pq()->doc($query);

$query = [

'table' => 'products',

'body' => [ 'query'=>['match'=>['title'=>'shoes']],'filters'=>"color IN ('blue', 'green')"]

];

$client->pq()->doc($query);utilsApi.sql('create table products(title text, color string) type=\'pq\'')

indexApi.insert({"table" : "products", "doc" : {"query" : "@title bag" }})

indexApi.insert({"table" : "products", "doc" : {"query" : "@title shoes", "filters": "color='red'" }})

indexApi.insert({"table" : "products", "doc" : {"query" : "@title shoes","filters": "color IN ('blue', 'green')" }})await utilsApi.sql('create table products(title text, color string) type=\'pq\'')

await indexApi.insert({"table" : "products", "doc" : {"query" : "@title bag" }})

await indexApi.insert({"table" : "products", "doc" : {"query" : "@title shoes", "filters": "color='red'" }})

await indexApi.insert({"table" : "products", "doc" : {"query" : "@title shoes","filters": "color IN ('blue', 'green')" }})res = await utilsApi.sql('create table products(title text, color string) type=\'pq\'');

res = indexApi.insert({"table" : "products", "doc" : {"query" : "@title bag" }});

res = indexApi.insert({"table" : "products", "doc" : {"query" : "@title shoes", "filters": "color='red'" }});

res = indexApi.insert({"table" : "products", "doc" : {"query" : "@title shoes","filters": "color IN ('blue', 'green')" }});utilsApi.sql("create table products(title text, color string) type='pq'", true);

doc = new HashMap<String,Object>(){{

put("query", "@title bag");

}};

newdoc = new InsertDocumentRequest();

newdoc.index("products").setDoc(doc);

indexApi.insert(newdoc);

doc = new HashMap<String,Object>(){{

put("query", "@title shoes");

put("filters", "color='red'");

}};

newdoc = new InsertDocumentRequest();

newdoc.index("products").setDoc(doc);

indexApi.insert(newdoc);

doc = new HashMap<String,Object>(){{

put("query", "@title shoes");

put("filters", "color IN ('blue', 'green')");

}};

newdoc = new InsertDocumentRequest();

newdoc.index("products").setDoc(doc);

indexApi.insert(newdoc);utilsApi.Sql("create table products(title text, color string) type='pq'", true);

Dictionary<string, Object> doc = new Dictionary<string, Object>();

doc.Add("query", "@title bag");

InsertDocumentRequest newdoc = new InsertDocumentRequest(index: "products", doc: doc);

indexApi.Insert(newdoc);

doc = new Dictionary<string, Object>();

doc.Add("query", "@title shoes");

doc.Add("filters", "color='red'");

newdoc = new InsertDocumentRequest(index: "products", doc: doc);

indexApi.Insert(newdoc);

doc = new Dictionary<string, Object>();

doc.Add("query", "@title bag");

doc.Add("filters", "color IN ('blue', 'green')");

newdoc = new InsertDocumentRequest(index: "products", doc: doc);

indexApi.Insert(newdoc);utils_api.sql("create table products(title text, color string) type='pq'", Some(true)).await;

let mut doc1 = HashMap::new();

doc1.insert("query".to_string(), serde_json::json!("@title bag"));

let insert_req1 = InsertDocumentRequest::new("products".to_string(), serde_json::json!(doc1));

index_api.insert(insert_req1).await;

let mut doc2 = HashMap::new();

doc2.insert("query".to_string(), serde_json::json!("@title shoes"));

doc2.insert("filters".to_string(), serde_json::json!("color='red'"));

let insert_req2 = InsertDocumentRequest::new("products".to_string(), serde_json::json!(doc2));

index_api.insert(insert_req2).await;

let mut doc3 = HashMap::new();

doc3.insert("query".to_string(), serde_json::json!("@title bag"));

doc3.insert("filters".to_string(), serde_json::json!("color IN ('blue', 'green')"));

let insert_req3 = InsertDocumentRequest::new("products".to_string(), serde_json::json!(doc3));

index_api.insert(insert_req3).await;res = await utilsApi.sql("create table test_pq(title text, color string) type='pq'");

res = indexApi.insert({

index: 'test_pq',

doc: { query : '@title bag' }

});

res = indexApi.insert(

index: 'test_pq',

doc: { query: '@title shoes', filters: "color='red'" }

});

res = indexApi.insert({

index: 'test_pq',

doc: { query : '@title shoes', filters: "color IN ('blue', 'green')" }

});apiClient.UtilsAPI.Sql(context.Background()).Body("create table test_pq(title text, color string) type='pq'").Execute()

indexDoc := map[string]interface{} {"query": "@title bag"}

indexReq := manticoreclient.NewInsertDocumentRequest("test_pq", indexDoc)

apiClient.IndexAPI.Insert(context.Background()).InsertDocumentRequest(*indexReq).Execute();

indexDoc = map[string]interface{} {"query": "@title shoes", "filters": "color='red'"}

indexReq = manticoreclient.NewInsertDocumentRequest("test_pq", indexDoc)

apiClient.IndexAPI.Insert(context.Background()).InsertDocumentRequest(*indexReq).Execute();

indexDoc = map[string]interface{} {"query": "@title shoes", "filters": "color IN ('blue', 'green')"}

indexReq = manticoreclient.NewInsertDocumentRequest("test_pq", indexDoc)

apiClient.IndexAPI.Insert(context.Background()).InsertDocumentRequest(*indexReq).Execute();+---------------------+--------------+------+---------------------------+

| id | query | tags | filters |

+---------------------+--------------+------+---------------------------+

| 1657852401006149635 | @title shoes | | color IN ('blue, 'green') |

| 1657852401006149636 | @title shoes | | color='red' |

| 1657852401006149637 | @title bag | | |

+---------------------+--------------+------+---------------------------+{

"table": "products",

"type": "doc",

"_id": 1657852401006149661,

"result": "created"

}

{

"table": "products",

"type": "doc",

"_id": 1657852401006149662,

"result": "created"

}

{

"table": "products",

"type": "doc",

"_id": 1657852401006149663,

"result": "created"

}Array(

[table] => products

[type] => doc

[_id] => 1657852401006149661

[result] => created

)

Array(

[table] => products

[type] => doc

[_id] => 1657852401006149662

[result] => created

)

Array(

[table] => products

[type] => doc

[_id] => 1657852401006149663

[result] => created

){'created': True,

'found': None,

'id': 0,

'table': 'products',

'result': 'created'}

{'created': True,

'found': None,

'id': 0,

'table': 'products',

'result': 'created'}

{'created': True,

'found': None,

'id': 0,

'table': 'products',

'result': 'created'}{'created': True,

'found': None,

'id': 0,

'table': 'products',

'result': 'created'}

{'created': True,

'found': None,

'id': 0,

'table': 'products',

'result': 'created'}

{'created': True,

'found': None,

'id': 0,

'table': 'products',

'result': 'created'}"table":"products","_id":0,"created":true,"result":"created"}

{"table":"products","_id":0,"created":true,"result":"created"}

{"table":"products","_id":0,"created":true,"result":"created"}{total=0, error=, warning=}

class SuccessResponse {

index: products

id: 0

created: true

result: created

found: null

}

class SuccessResponse {

index: products

id: 0

created: true

result: created

found: null

}

class SuccessResponse {

index: products

id: 0

created: true

result: created

found: null

}{total=0, error="", warning=""}

class SuccessResponse {

index: products

id: 0

created: true

result: created

found: null

}

class SuccessResponse {

index: products

id: 0

created: true

result: created

found: null

}

class SuccessResponse {

index: products

id: 0

created: true

result: created

found: null

}{total=0, error="", warning=""}

class SuccessResponse {

index: products

id: 0

created: true

result: created

found: null

}

class SuccessResponse {

index: products

id: 0

created: true

result: created

found: null

}

class SuccessResponse {

index: products

id: 0

created: true

result: created

found: null

}{

"table":"test_pq",

"_id":1657852401006149661,

"created":true,

"result":"created"

}

{

"table":"test_pq",

"_id":1657852401006149662,

"created":true,

"result":"created"

}

{

"table":"test_pq",

"_id":1657852401006149663,

"created":true,

"result":"created"

}{

"table":"test_pq",

"_id":1657852401006149661,

"created":true,

"result":"created"

}

{

"table":"test_pq",

"_id":1657852401006149662,

"created":true,

"result":"created"

}

{

"table":"test_pq",

"_id":1657852401006149663,

"created":true,

"result":"created"

}The first document doesn't match any rules. It could match the first two, but they require additional filters.

The second document matches one rule. Note that CALL PQ by default expects a document to be a JSON, but if you use 0 as docs_json, you can pass a plain string instead.

- SQL

- JSON

- PHP

- Python

- Python-asyncio

- javascript

- Java

- C#

- Rust

- TypeScript

- Go

CALL PQ('products', 'Beautiful shoes', 0 as docs_json);

CALL PQ('products', 'What a nice bag', 0 as docs_json);

CALL PQ('products', '{"title": "What a nice bag"}');POST /pq/products/search

{

"query": {

"percolate": {

"document": {

"title": "What a nice bag"

}

}

}

}$percolate = [

'table' => 'products',

'body' => [

'query' => [

'percolate' => [

'document' => [

'title' => 'What a nice bag'

]

]

]

]

];

$client->pq()->search($percolate);searchApi.percolate('products',{"query":{"percolate":{"document":{"title":"What a nice bag"}}}})await searchApi.percolate('products',{"query":{"percolate":{"document":{"title":"What a nice bag"}}}})res = await searchApi.percolate('products',{"query":{"percolate":{"document":{"title":"What a nice bag"}}}});PercolateRequest percolateRequest = new PercolateRequest();

query = new HashMap<String,Object>(){{

put("percolate",new HashMap<String,Object >(){{

put("document", new HashMap<String,Object >(){{

put("title","what a nice bag");

}});

}});

}};

percolateRequest.query(query);

searchApi.percolate("test_pq",percolateRequest);Dictionary<string, Object> percolateDoc = new Dictionary<string, Object>();

percolateDoc.Add("document", new Dictionary<string, Object> {{ "title", "what a nice bag" }});

Dictionary<string, Object> query = new Dictionary<string, Object> {{ "percolate", percolateDoc }};

PercolateRequest percolateRequest = new PercolateRequest(query=query);

searchApi.Percolate("test_pq",percolateRequest);let mut percolate_doc_fields = HashMap::new();

percolate_doc_fileds.insert("title".to_string(), "what a nice bag");

let mut percolate_doc = HashMap::new();

percolate_doc.insert("document".to_string(), percolate_doc_fields);

let percolate_query = PercolateRequestQuery::new(serde_json::json!(percolate_doc));

let percolate_req = PercolateRequest::new(percolate_query);

search_api.percolate("test_pq", percolate_req).await;res = await searchApi.percolate('test_pq', { query: { percolate: { document : { title : 'What a nice bag' } } } } );query := map[string]interface{} {"title": "what a nice bag"}

percolateRequestQuery := manticoreclient.NewPercolateQuery(query)

percolateRequest := manticoreclient.NewPercolateRequest(percolateRequestQuery)

res, _, _ := apiClient.SearchAPI.Percolate(context.Background(), "test_pq").PercolateRequest(*percolateRequest).Execute()+---------------------+

| id |

+---------------------+

| 1657852401006149637 |

+---------------------+

+---------------------+

| id |

+---------------------+

| 1657852401006149637 |

+---------------------+{

"took": 0,

"timed_out": false,

"hits": {

"total": 1,

"max_score": 1,

"hits": [

{

"table": "products",

"_type": "doc",

"_id": 1657852401006149644,

"_score": "1",

"_source": {

"query": {

"ql": "@title bag"

}

},

"fields": {

"_percolator_document_slot": [

1

]

}

}

]

}

}Array

(

[took] => 0

[timed_out] =>

[hits] => Array

(

[total] => 1

[max_score] => 1

[hits] => Array

(

[0] => Array

(

[_index] => products

[_type] => doc

[_id] => 1657852401006149644

[_score] => 1

[_source] => Array

(

[query] => Array

(

[match] => Array

(

[title] => bag

)

)

)

[fields] => Array

(

[_percolator_document_slot] => Array

(

[0] => 1

)

)

)

)

)

){'hits': {'hits': [{u'_id': u'2811025403043381480',

u'table': u'products',

u'_score': u'1',

u'_source': {u'query': {u'ql': u'@title bag'}},

u'_type': u'doc',

u'fields': {u'_percolator_document_slot': [1]}}],

'total': 1},

'profile': None,

'timed_out': False,

'took': 0}{'hits': {'hits': [{u'_id': u'2811025403043381480',

u'table': u'products',

u'_score': u'1',

u'_source': {u'query': {u'ql': u'@title bag'}},

u'_type': u'doc',

u'fields': {u'_percolator_document_slot': [1]}}],

'total': 1},

'profile': None,

'timed_out': False,

'took': 0}{

"took": 0,

"timed_out": false,

"hits": {

"total": 1,

"hits": [

{

"table": "products",

"_type": "doc",

"_id": 2811045522851233808,

"_score": "1",

"_source": {

"query": {

"ql": "@title bag"

}

},

"fields": {

"_percolator_document_slot": [

1

]

}

}

]

}

}class SearchResponse {

took: 0

timedOut: false

hits: class SearchResponseHits {

total: 1

maxScore: 1

hits: [{_index=products, _type=doc, _id=2811045522851234109, _score=1, _source={query={ql=@title bag}}, fields={_percolator_document_slot=[1]}}]

aggregations: null

}

profile: null

}class SearchResponse {

took: 0

timedOut: false

hits: class SearchResponseHits {

total: 1

maxScore: 1

hits: [{_index=products, _type=doc, _id=2811045522851234109, _score=1, _source={query={ql=@title bag}}, fields={_percolator_document_slot=[1]}}]

aggregations: null

}

profile: null

}class SearchResponse {

took: 0

timedOut: false

hits: class SearchResponseHits {

total: 1

maxScore: 1

hits: [{_index=products, _type=doc, _id=2811045522851234109, _score=1, _source={query={ql=@title bag}}, fields={_percolator_document_slot=[1]}}]

aggregations: null

}

profile: null

}{

"took": 0,

"timed_out": false,

"hits": {

"total": 1,

"hits": [

{

"table": "test_pq",

"_type": "doc",

"_id": 1657852401006149661,

"_score": "1",

"_source": {

"query": {

"ql": "@title bag"

}

},

"fields": {

"_percolator_document_slot": [

1

]

}

}

]

}

}{

"took": 0,

"timed_out": false,

"hits": {

"total": 1,

"hits": [

{

"table": "test_pq",

"_type": "doc",

"_id": 1657852401006149661,

"_score": "1",

"_source": {

"query": {

"ql": "@title bag"

}

},

"fields": {

"_percolator_document_slot": [

1

]

}

}

]

}

}- SQL

- JSON

- PHP

- Python

- Python-asyncio

- javascript

- Java

- C#

- Rust

- TypeScript

- Go

CALL PQ('products', '{"title": "What a nice bag"}', 1 as query);POST /pq/products/search

{

"query": {

"percolate": {

"document": {

"title": "What a nice bag"

}

}

}

}$percolate = [

'table' => 'products',

'body' => [

'query' => [

'percolate' => [

'document' => [

'title' => 'What a nice bag'

]

]

]

]

];

$client->pq()->search($percolate);searchApi.percolate('products',{"query":{"percolate":{"document":{"title":"What a nice bag"}}}})await searchApi.percolate('products',{"query":{"percolate":{"document":{"title":"What a nice bag"}}}})res = await searchApi.percolate('products',{"query":{"percolate":{"document":{"title":"What a nice bag"}}}});PercolateRequest percolateRequest = new PercolateRequest();

query = new HashMap<String,Object>(){{

put("percolate",new HashMap<String,Object >(){{

put("document", new HashMap<String,Object >(){{

put("title","what a nice bag");

}});

}});

}};

percolateRequest.query(query);

searchApi.percolate("test_pq",percolateRequest);Dictionary<string, Object> percolateDoc = new Dictionary<string, Object>();

percolateDoc.Add("document", new Dictionary<string, Object> {{ "title", "what a nice bag" }});

Dictionary<string, Object> query = new Dictionary<string, Object> {{ "percolate", percolateDoc }};

PercolateRequest percolateRequest = new PercolateRequest(query=query);

searchApi.Percolate("test_pq",percolateRequest);let mut percolate_doc_fields = HashMap::new();

percolate_doc_fileds.insert("title".to_string(), "what a nice bag");

let mut percolate_doc = HashMap::new();

percolate_doc.insert("document".to_string(), percolate_doc_fields);

let percolate_query = PercolateRequestQuery::new(serde_json::json!(percolate_doc));

let percolate_req = PercolateRequest::new(percolate_query);

search_api.percolate("test_pq", percolate_req).await;res = await searchApi.percolate('test_pq', { query: { percolate: { document : { title : 'What a nice bag' } } } } );query := map[string]interface{} {"title": "what a nice bag"}

percolateRequestQuery := manticoreclient.NewPercolateQuery(query)

percolateRequest := manticoreclient.NewPercolateRequest(percolateRequestQuery)

res, _, _ := apiClient.SearchAPI.Percolate(context.Background(), "test_pq").PercolateRequest(*percolateRequest).Execute()+---------------------+------------+------+---------+

| id | query | tags | filters |

+---------------------+------------+------+---------+

| 1657852401006149637 | @title bag | | |

+---------------------+------------+------+---------+{

"took": 0,

"timed_out": false,

"hits": {

"total": 1,

"max_score": 1,

"hits": [

{

"table": "products",

"_type": "doc",

"_id": 1657852401006149644,

"_score": "1",

"_source": {

"query": {

"ql": "@title bag"

}

},

"fields": {

"_percolator_document_slot": [

1

]

}

}

]

}

}Array

(

[took] => 0

[timed_out] =>

[hits] => Array

(

[total] => 1

[max_score] => 1

[hits] => Array

(

[0] => Array

(

[_index] => products

[_type] => doc

[_id] => 1657852401006149644

[_score] => 1

[_source] => Array

(

[query] => Array

(

[match] => Array

(

[title] => bag

)

)

)

[fields] => Array

(

[_percolator_document_slot] => Array

(

[0] => 1

)

)

)

)

)

){'hits': {'hits': [{u'_id': u'2811025403043381480',

u'table': u'products',

u'_score': u'1',

u'_source': {u'query': {u'ql': u'@title bag'}},

u'_type': u'doc',

u'fields': {u'_percolator_document_slot': [1]}}],

'total': 1},

'profile': None,

'timed_out': False,

'took': 0}{'hits': {'hits': [{u'_id': u'2811025403043381480',

u'table': u'products',

u'_score': u'1',

u'_source': {u'query': {u'ql': u'@title bag'}},

u'_type': u'doc',

u'fields': {u'_percolator_document_slot': [1]}}],

'total': 1},

'profile': None,

'timed_out': False,

'took': 0}{

"took": 0,

"timed_out": false,

"hits": {

"total": 1,

"hits": [

{

"table": "products",

"_type": "doc",

"_id": 2811045522851233808,

"_score": "1",

"_source": {

"query": {

"ql": "@title bag"

}

},

"fields": {

"_percolator_document_slot": [

1

]

}

}

]

}

}class SearchResponse {

took: 0

timedOut: false

hits: class SearchResponseHits {

total: 1

maxScore: 1

hits: [{_index=products, _type=doc, _id=2811045522851234109, _score=1, _source={query={ql=@title bag}}, fields={_percolator_document_slot=[1]}}]

aggregations: null

}

profile: null

}class SearchResponse {

took: 0

timedOut: false

hits: class SearchResponseHits {

total: 1

maxScore: 1

hits: [{_index=products, _type=doc, _id=2811045522851234109, _score=1, _source={query={ql=@title bag}}, fields={_percolator_document_slot=[1]}}]

aggregations: null

}

profile: null

}class SearchResponse {

took: 0

timedOut: false

hits: class SearchResponseHits {

total: 1

maxScore: 1

hits: [{_index=products, _type=doc, _id=2811045522851234109, _score=1, _source={query={ql=@title bag}}, fields={_percolator_document_slot=[1]}}]

aggregations: null

}

profile: null

}{

"took": 0,

"timed_out": false,

"hits": {

"total": 1,

"hits": [

{

"table": "test_pq",

"_type": "doc",

"_id": 1657852401006149661,

"_score": "1",

"_source": {

"query": {

"ql": "@title bag"

}

},

"fields": {

"_percolator_document_slot": [

1

]

}

}

]

}

}{

"took": 0,

"timed_out": false,

"hits": {

"total": 1,

"hits": [

{

"table": "test_pq",

"_type": "doc",

"_id": 1657852401006149661,

"_score": "1",

"_source": {

"query": {

"ql": "@title bag"

}

},

"fields": {

"_percolator_document_slot": [

1

]

}

}

]

}

}Note that with CALL PQ, you can provide multiple documents in different ways:

- as an array of plain documents in round brackets

('doc1', 'doc2'). This requires0 as docs_json - as an array of JSONs in round brackets

('{doc1}', '{doc2}') - or as a standard JSON array

'[{doc1}, {doc2}]'

- SQL

- JSON

- PHP

- Python

- Python-asyncio

- javascript

- Java

- C#

- Rust

- TypeScript

- Go

CALL PQ('products', ('nice pair of shoes', 'beautiful bag'), 1 as query, 0 as docs_json);

CALL PQ('products', ('{"title": "nice pair of shoes", "color": "red"}', '{"title": "beautiful bag"}'), 1 as query);

CALL PQ('products', '[{"title": "nice pair of shoes", "color": "blue"}, {"title": "beautiful bag"}]', 1 as query);POST /pq/products/search

{

"query": {

"percolate": {

"documents": [

{"title": "nice pair of shoes", "color": "blue"},

{"title": "beautiful bag"}

]

}

}

}$percolate = [

'table' => 'products',

'body' => [

'query' => [

'percolate' => [

'documents' => [

['title' => 'nice pair of shoes','color'=>'blue'],

['title' => 'beautiful bag']

]

]

]

]

];

$client->pq()->search($percolate);searchApi.percolate('products',{"query":{"percolate":{"documents":[{"title":"nice pair of shoes","color":"blue"},{"title":"beautiful bag"}]}}})await searchApi.percolate('products',{"query":{"percolate":{"documents":[{"title":"nice pair of shoes","color":"blue"},{"title":"beautiful bag"}]}}})res = await searchApi.percolate('products',{"query":{"percolate":{"documents":[{"title":"nice pair of shoes","color":"blue"},{"title":"beautiful bag"}]}}});percolateRequest = new PercolateRequest();

query = new HashMap<String,Object>(){{

put("percolate",new HashMap<String,Object >(){{

put("documents", new ArrayList<Object>(){{

add(new HashMap<String,Object >(){{

put("title","nice pair of shoes");

put("color","blue");

}});

add(new HashMap<String,Object >(){{

put("title","beautiful bag");

}});

}});

}});

}};

percolateRequest.query(query);

searchApi.percolate("products",percolateRequest);var doc1 = new Dictionary<string, Object>();

doc1.Add("title","nice pair of shoes");

doc1.Add("color","blue");

var doc2 = new Dictionary<string, Object>();

doc2.Add("title","beautiful bag");

var docs = new List<Object> {doc1, doc2};

Dictionary<string, Object> percolateDoc = new Dictionary<string, Object> {{ "documents", docs }};

Dictionary<string, Object> query = new Dictionary<string, Object> {{ "percolate", percolateDoc }};

PercolateRequest percolateRequest = new PercolateRequest(query=query);

searchApi.Percolate("products",percolateRequest);let mut percolate_doc_fields1 = HashMap::new();

percolate_doc_fields1.insert("title".to_string(), "nice pair of shoes");

percolate_doc_fields1.insert("color".to_string(), "blue");

let mut percolate_doc_fields2 = HashMap::new();

percolate_doc_fields2.insert("title".to_string(), "beautiful bag");

let mut percolate_doc_fields_list: [HashMap; 2] = [percolate_doc_fields1, percolate_doc_fields2];

let mut percolate_doc = HashMap::new();

percolate_doc.insert("documents".to_string(), percolate_doc_fields_list);

let percolate_query = PercolateRequestQuery::new(serde_json::json!(percolate_doc));

let percolate_req = PercolateRequest::new(percolate_query);

search_api.percolate("products", percolate_req).await;docs = [ {title : 'What a nice bag'}, {title : 'Really nice shoes'} ];

res = await searchApi.percolate('test_pq', { query: { percolate: { documents : docs } } } );doc1 := map[string]interface{} {"title": "What a nice bag"}

doc2 := map[string]interface{} {"title": "Really nice shoes"}

query := []interface{} {doc1, doc2}

percolateRequestQuery := manticoreclient.NewPercolateQuery(query)

percolateRequest := manticoreclient.NewPercolateRequest(percolateRequestQuery)

res, _, _ := apiClient.SearchAPI.Percolate(context.Background(), "test_pq").PercolateRequest(*percolateRequest).Execute()+---------------------+------------+------+---------+

| id | query | tags | filters |

+---------------------+------------+------+---------+

| 1657852401006149637 | @title bag | | |

+---------------------+------------+------+---------+

+---------------------+--------------+------+-------------+

| id | query | tags | filters |

+---------------------+--------------+------+-------------+

| 1657852401006149636 | @title shoes | | color='red' |

| 1657852401006149637 | @title bag | | |

+---------------------+--------------+------+-------------+

+---------------------+--------------+------+---------------------------+

| id | query | tags | filters |

+---------------------+--------------+------+---------------------------+

| 1657852401006149635 | @title shoes | | color IN ('blue, 'green') |

| 1657852401006149637 | @title bag | | |

+---------------------+--------------+------+---------------------------+{

"took": 0,

"timed_out": false,

"hits": {

"total": 2,

"max_score": 1,

"hits": [

{

"table": "products",

"_type": "doc",

"_id": 1657852401006149644,

"_score": "1",

"_source": {

"query": {

"ql": "@title bag"

}

},

"fields": {

"_percolator_document_slot": [

2

]

}

},

{

"table": "products",

"_type": "doc",

"_id": 1657852401006149646,

"_score": "1",

"_source": {

"query": {

"ql": "@title shoes"

}

},

"fields": {

"_percolator_document_slot": [

1

]

}

}

]

}

}Array

(

[took] => 23

[timed_out] =>

[hits] => Array

(

[total] => 2

[max_score] => 1

[hits] => Array

(

[0] => Array

(

[_index] => products

[_type] => doc

[_id] => 2810781492890828819

[_score] => 1

[_source] => Array

(

[query] => Array

(

[match] => Array

(

[title] => bag

)

)

)

[fields] => Array

(

[_percolator_document_slot] => Array

(

[0] => 2

)

)

)

[1] => Array

(

[_index] => products

[_type] => doc

[_id] => 2810781492890828821

[_score] => 1

[_source] => Array

(

[query] => Array

(

[match] => Array

(

[title] => shoes

)

)

)

[fields] => Array

(

[_percolator_document_slot] => Array

(

[0] => 1

)

)

)

)

)

){'hits': {'hits': [{u'_id': u'2811025403043381494',

u'table': u'products',

u'_score': u'1',

u'_source': {u'query': {u'ql': u'@title bag'}},

u'_type': u'doc',

u'fields': {u'_percolator_document_slot': [2]}},

{u'_id': u'2811025403043381496',

u'table': u'products',

u'_score': u'1',

u'_source': {u'query': {u'ql': u'@title shoes'}},

u'_type': u'doc',

u'fields': {u'_percolator_document_slot': [1]}}],

'total': 2},

'profile': None,

'timed_out': False,

'took': 0}{'hits': {'hits': [{u'_id': u'2811025403043381494',

u'table': u'products',

u'_score': u'1',

u'_source': {u'query': {u'ql': u'@title bag'}},

u'_type': u'doc',

u'fields': {u'_percolator_document_slot': [2]}},

{u'_id': u'2811025403043381496',

u'table': u'products',

u'_score': u'1',

u'_source': {u'query': {u'ql': u'@title shoes'}},

u'_type': u'doc',

u'fields': {u'_percolator_document_slot': [1]}}],

'total': 2},

'profile': None,

'timed_out': False,

'took': 0}{

"took": 6,

"timed_out": false,

"hits": {

"total": 2,

"hits": [

{

"table": "products",

"_type": "doc",

"_id": 2811045522851233808,

"_score": "1",

"_source": {

"query": {

"ql": "@title bag"

}

},

"fields": {

"_percolator_document_slot": [

2

]

}

},

{

"table": "products",

"_type": "doc",

"_id": 2811045522851233810,

"_score": "1",

"_source": {

"query": {

"ql": "@title shoes"

}

},

"fields": {

"_percolator_document_slot": [

1

]

}

}

]

}

}class SearchResponse {

took: 0

timedOut: false

hits: class SearchResponseHits {

total: 2

maxScore: 1

hits: [{_index=products, _type=doc, _id=2811045522851234133, _score=1, _source={query={ql=@title bag}}, fields={_percolator_document_slot=[2]}}, {_index=products, _type=doc, _id=2811045522851234135, _score=1, _source={query={ql=@title shoes}}, fields={_percolator_document_slot=[1]}}]

aggregations: null

}

profile: null

}class SearchResponse {

took: 0

timedOut: false

hits: class SearchResponseHits {

total: 2

maxScore: 1

hits: [{_index=products, _type=doc, _id=2811045522851234133, _score=1, _source={query={ql=@title bag}}, fields={_percolator_document_slot=[2]}}, {_index=products, _type=doc, _id=2811045522851234135, _score=1, _source={query={ql=@title shoes}}, fields={_percolator_document_slot=[1]}}]

aggregations: null

}

profile: null

}class SearchResponse {

took: 0

timedOut: false

hits: class SearchResponseHits {

total: 2

maxScore: 1

hits: [{_index=products, _type=doc, _id=2811045522851234133, _score=1, _source={query={ql=@title bag}}, fields={_percolator_document_slot=[2]}}, {_index=products, _type=doc, _id=2811045522851234135, _score=1, _source={query={ql=@title shoes}}, fields={_percolator_document_slot=[1]}}]

aggregations: null

}

profile: null

}{

"took": 0,

"timed_out": false,

"hits": {

"total": 2,

"hits": [

{

"table": "test_pq",

"_type": "doc",

"_id": 1657852401006149661,

"_score": "1",

"_source": {

"query": {

"ql": "@title bag"

}

},

"fields": {

"_percolator_document_slot": [

1

]

}

},

{

"table": "test_pq",

"_type": "doc",

"_id": 1657852401006149662,

"_score": "1",

"_source": {

"query": {

"ql": "@title shoes"

}

},

"fields": {

"_percolator_document_slot": [

1

]

}

}

]

}

}{

"took": 0,

"timed_out": false,

"hits": {

"total": 2,

"hits": [

{

"table": "test_pq",

"_type": "doc",

"_id": 1657852401006149661,

"_score": "1",

"_source": {

"query": {

"ql": "@title bag"

}

},

"fields": {

"_percolator_document_slot": [

1

]

}

},

{

"table": "test_pq",

"_type": "doc",

"_id": 1657852401006149662,

"_score": "1",

"_source": {

"query": {

"ql": "@title shoes"

}

},

"fields": {

"_percolator_document_slot": [

1

]

}

}

]

}

}Using the option 1 as docs allows you to see which documents of the provided ones match which rules.

- SQL

- JSON

- PHP

- Python

- Python-asyncio

- javascript

- Java

- C#

- Rust

- TypeScript

- Go

CALL PQ('products', '[{"title": "nice pair of shoes", "color": "blue"}, {"title": "beautiful bag"}]', 1 as query, 1 as docs);POST /pq/products/search

{

"query": {

"percolate": {

"documents": [

{"title": "nice pair of shoes", "color": "blue"},

{"title": "beautiful bag"}

]

}

}

}$percolate = [

'table' => 'products',

'body' => [

'query' => [

'percolate' => [

'documents' => [

['title' => 'nice pair of shoes','color'=>'blue'],

['title' => 'beautiful bag']

]

]

]

]

];

$client->pq()->search($percolate);searchApi.percolate('products',{"query":{"percolate":{"documents":[{"title":"nice pair of shoes","color":"blue"},{"title":"beautiful bag"}]}}})await searchApi.percolate('products',{"query":{"percolate":{"documents":[{"title":"nice pair of shoes","color":"blue"},{"title":"beautiful bag"}]}}})res = await searchApi.percolate('products',{"query":{"percolate":{"documents":[{"title":"nice pair of shoes","color":"blue"},{"title":"beautiful bag"}]}}});percolateRequest = new PercolateRequest();

query = new HashMap<String,Object>(){{

put("percolate",new HashMap<String,Object >(){{

put("documents", new ArrayList<Object>(){{

add(new HashMap<String,Object >(){{

put("title","nice pair of shoes");

put("color","blue");

}});

add(new HashMap<String,Object >(){{

put("title","beautiful bag");

}});

}});

}});

}};

percolateRequest.query(query);

searchApi.percolate("products",percolateRequest);var doc1 = new Dictionary<string, Object>();

doc1.Add("title","nice pair of shoes");

doc1.Add("color","blue");

var doc2 = new Dictionary<string, Object>();

doc2.Add("title","beautiful bag");

var docs = new List<Object> {doc1, doc2};

Dictionary<string, Object> percolateDoc = new Dictionary<string, Object> {{ "documents", docs }};

Dictionary<string, Object> query = new Dictionary<string, Object> {{ "percolate", percolateDoc }};

PercolateRequest percolateRequest = new PercolateRequest(query=query);

searchApi.Percolate("products",percolateRequest);let mut percolate_doc_fields1 = HashMap::new();

percolate_doc_fields1.insert("title".to_string(), "nice pair of shoes");

percolate_doc_fields1.insert("color".to_string(), "blue");

let mut percolate_doc_fields2 = HashMap::new();

percolate_doc_fields2.insert("title".to_string(), "beautiful bag");

let mut percolate_doc_fields_list: [HashMap; 2] = [percolate_doc_fields1, percolate_doc_fields2];

let mut percolate_doc = HashMap::new();

percolate_doc.insert("documents".to_string(), percolate_doc_fields_list);

let percolate_query = PercolateRequestQuery::new(serde_json::json!(percolate_doc));

let percolate_req = PercolateRequest::new(percolate_query);

search_api.percolate("products", percolate_req).await;docs = [ {title : 'What a nice bag'}, {title : 'Really nice shoes'} ];

res = await searchApi.percolate('test_pq', { query: { percolate: { documents : docs } } } );doc1 := map[string]interface{} {"title": "What a nice bag"}

doc2 := map[string]interface{} {"title": "Really nice shoes"}

query := []interface{} {doc1, doc2}

percolateRequestQuery := manticoreclient.NewPercolateQuery(query)

percolateRequest := manticoreclient.NewPercolateRequest(percolateRequestQuery)

res, _, _ := apiClient.SearchAPI.Percolate(context.Background(), "test_pq").PercolateRequest(*percolateRequest).Execute()+---------------------+-----------+--------------+------+---------------------------+

| id | documents | query | tags | filters |

+---------------------+-----------+--------------+------+---------------------------+

| 1657852401006149635 | 1 | @title shoes | | color IN ('blue, 'green') |

| 1657852401006149637 | 2 | @title bag | | |

+---------------------+-----------+--------------+------+---------------------------+{

"took": 0,

"timed_out": false,

"hits": {

"total": 2,

"max_score": 1,

"hits": [

{

"table": "products",

"_type": "doc",

"_id": 1657852401006149644,

"_score": "1",

"_source": {

"query": {

"ql": "@title bag"

}

},

"fields": {

"_percolator_document_slot": [

2

]

}

},

{

"table": "products",

"_type": "doc",

"_id": 1657852401006149646,

"_score": "1",

"_source": {

"query": {

"ql": "@title shoes"

}

},

"fields": {

"_percolator_document_slot": [

1

]

}

}

]

}

}Array

(

[took] => 23

[timed_out] =>

[hits] => Array

(

[total] => 2

[max_score] => 1

[hits] => Array

(

[0] => Array

(

[_index] => products

[_type] => doc

[_id] => 2810781492890828819

[_score] => 1

[_source] => Array

(

[query] => Array

(

[match] => Array

(

[title] => bag

)

)

)

[fields] => Array

(

[_percolator_document_slot] => Array

(

[0] => 2

)

)

)

[1] => Array

(

[_index] => products

[_type] => doc

[_id] => 2810781492890828821

[_score] => 1

[_source] => Array

(

[query] => Array

(

[match] => Array

(

[title] => shoes

)

)

)

[fields] => Array

(

[_percolator_document_slot] => Array

(

[0] => 1

)

)

)

)

)

){'hits': {'hits': [{u'_id': u'2811025403043381494',

u'table': u'products',

u'_score': u'1',

u'_source': {u'query': {u'ql': u'@title bag'}},

u'_type': u'doc',

u'fields': {u'_percolator_document_slot': [2]}},

{u'_id': u'2811025403043381496',

u'table': u'products',

u'_score': u'1',

u'_source': {u'query': {u'ql': u'@title shoes'}},

u'_type': u'doc',

u'fields': {u'_percolator_document_slot': [1]}}],

'total': 2},

'profile': None,

'timed_out': False,

'took': 0}{'hits': {'hits': [{u'_id': u'2811025403043381494',

u'table': u'products',

u'_score': u'1',

u'_source': {u'query': {u'ql': u'@title bag'}},

u'_type': u'doc',

u'fields': {u'_percolator_document_slot': [2]}},

{u'_id': u'2811025403043381496',

u'table': u'products',

u'_score': u'1',

u'_source': {u'query': {u'ql': u'@title shoes'}},

u'_type': u'doc',

u'fields': {u'_percolator_document_slot': [1]}}],

'total': 2},

'profile': None,

'timed_out': False,

'took': 0}{

"took": 6,

"timed_out": false,

"hits": {

"total": 2,

"hits": [

{

"table": "products",

"_type": "doc",

"_id": 2811045522851233808,

"_score": "1",

"_source": {

"query": {

"ql": "@title bag"

}

},

"fields": {

"_percolator_document_slot": [

2

]

}

},

{

"table": "products",

"_type": "doc",

"_id": 2811045522851233810,

"_score": "1",

"_source": {

"query": {

"ql": "@title shoes"

}

},

"fields": {

"_percolator_document_slot": [

1

]

}

}

]

}

}class SearchResponse {

took: 0

timedOut: false

hits: class SearchResponseHits {

total: 2

maxScore: 1

hits: [{_index=products, _type=doc, _id=2811045522851234133, _score=1, _source={query={ql=@title bag}}, fields={_percolator_document_slot=[2]}}, {_index=products, _type=doc, _id=2811045522851234135, _score=1, _source={query={ql=@title shoes}}, fields={_percolator_document_slot=[1]}}]

aggregations: null

}

profile: null

}class SearchResponse {

took: 0

timedOut: false

hits: class SearchResponseHits {

total: 2

maxScore: 1

hits: [{_index=products, _type=doc, _id=2811045522851234133, _score=1, _source={query={ql=@title bag}}, fields={_percolator_document_slot=[2]}}, {_index=products, _type=doc, _id=2811045522851234135, _score=1, _source={query={ql=@title shoes}}, fields={_percolator_document_slot=[1]}}]

aggregations: null

}

profile: null

}class SearchResponse {

took: 0

timedOut: false

hits: class SearchResponseHits {

total: 2

maxScore: 1

hits: [{_index=products, _type=doc, _id=2811045522851234133, _score=1, _source={query={ql=@title bag}}, fields={_percolator_document_slot=[2]}}, {_index=products, _type=doc, _id=2811045522851234135, _score=1, _source={query={ql=@title shoes}}, fields={_percolator_document_slot=[1]}}]

aggregations: null

}

profile: null

}{

"took": 0,

"timed_out": false,

"hits": {

"total": 2,

"hits": [

{

"table": "test_pq",

"_type": "doc",

"_id": 1657852401006149661,

"_score": "1",

"_source": {

"query": {

"ql": "@title bag"

}

},

"fields": {

"_percolator_document_slot": [

1

]

}

},

{

"table": "test_pq",

"_type": "doc",

"_id": 1657852401006149662,

"_score": "1",

"_source": {

"query": {

"ql": "@title shoes"

}

},

"fields": {

"_percolator_document_slot": [

1

]

}

}

]

}

}{

"took": 0,

"timed_out": false,

"hits": {

"total": 2,

"hits": [

{

"table": "test_pq",

"_type": "doc",

"_id": 1657852401006149661,

"_score": "1",

"_source": {

"query": {

"ql": "@title bag"

}

},

"fields": {

"_percolator_document_slot": [

1

]

}

},

{

"table": "test_pq",

"_type": "doc",

"_id": 1657852401006149662,

"_score": "1",

"_source": {

"query": {

"ql": "@title shoes"

}

},

"fields": {

"_percolator_document_slot": [

1

]

}

}

]

}

}By default, matching document ids correspond to their relative numbers in the list you provide. However, in some cases, each document already has its own id. For this case, there's an option 'id field name' as docs_id for CALL PQ.

Note that if the id cannot be found by the provided field name, the PQ rule will not be shown in the results.

This option is only available for CALL PQ via SQL.

- SQL

CALL PQ('products', '[{"id": 123, "title": "nice pair of shoes", "color": "blue"}, {"id": 456, "title": "beautiful bag"}]', 1 as query, 'id' as docs_id, 1 as docs);+---------------------+-----------+--------------+------+---------------------------+

| id | documents | query | tags | filters |

+---------------------+-----------+--------------+------+---------------------------+

| 1657852401006149664 | 456 | @title bag | | |

| 1657852401006149666 | 123 | @title shoes | | color IN ('blue, 'green') |

+---------------------+-----------+--------------+------+---------------------------+When using CALL PQ with separate JSONs, you can use the option 1 as skip_bad_json to skip any invalid JSONs in the input. In the example below, the 2nd query fails due to an invalid JSON, but the 3rd query avoids the error by using 1 as skip_bad_json. Keep in mind that this option is not available when sending JSON queries over HTTP, as the whole JSON query must be valid in that case.

- SQL

CALL PQ('products', ('{"title": "nice pair of shoes", "color": "blue"}', '{"title": "beautiful bag"}'));

CALL PQ('products', ('{"title": "nice pair of shoes", "color": "blue"}', '{"title": "beautiful bag}'));

CALL PQ('products', ('{"title": "nice pair of shoes", "color": "blue"}', '{"title": "beautiful bag}'), 1 as skip_bad_json);+---------------------+

| id |

+---------------------+

| 1657852401006149635 |

| 1657852401006149637 |

+---------------------+

ERROR 1064 (42000): Bad JSON objects in strings: 2

+---------------------+

| id |

+---------------------+

| 1657852401006149635 |

+---------------------+Percolate queries are designed with high throughput and large data volumes in mind. To optimize performance for lower latency and higher throughput, consider the following.

There are two modes of distribution for a percolate table and how a percolate query can work against it:

- Sparse (default). Ideal for: many documents, mirrored PQ tables. When your document set is large but the set of queries stored in the PQ table is small, the sparse mode is beneficial. In this mode, the batch of documents you pass will be divided among the number of agents, so each node processes only a portion of the documents from your request. Manticore splits your document set and distributes chunks among the mirrors. Once the agents have finished processing the queries, Manticore collects and merges the results, returning a final query set as if it came from a single table. Use replication to assist the process.

- Sharded. Ideal for: many PQ rules, rules split among PQ tables. In this mode, the entire document set is broadcast to all tables of the distributed PQ table without initially splitting the documents. This is beneficial when pushing a relatively small set of documents, but the number of stored queries is large. In this case, it's more appropriate to store only a portion of PQ rules on each node and then merge the results returned from the nodes that process the same set of documents against different sets of PQ rules. This mode must be explicitly set, as it implies an increase in network payload and expects tables with different PQs, which replication cannot do out-of-the-box.

Assume you have table pq_d2 defined as:

table pq_d2

{

type = distributed

agent = 127.0.0.1:6712:pq

agent = 127.0.0.1:6712:ptitle

}Each of 'pq' and 'ptitle' contains:

- SQL

- JSON

- PHP

- Python

- Python-asyncio

- javascript

- Java

- C#

- Rust

- TypeScript

- Go

SELECT * FROM pq;POST /pq/pq/search$params = [

'table' => 'pq',

'body' => [

]

];

$response = $client->pq()->search($params);searchApi.search({"table":"pq","query":{"match_all":{}}})await searchApi.search({"table":"pq","query":{"match_all":{}}})res = await searchApi.search({"table":"pq","query":{"match_all":{}}});Map<String,Object> query = new HashMap<String,Object>();

query.put("match_all",null);

SearchRequest searchRequest = new SearchRequest();

searchRequest.setIndex("pq");

searchRequest.setQuery(query);

SearchResponse searchResponse = searchApi.search(searchRequest);object query = new { match_all=null };

SearchRequest searchRequest = new SearchRequest("pq", query);

SearchResponse searchResponse = searchApi.Search(searchRequest);let query = SearchQuery::new();

let search_req = SearchRequest {

table: "pq".to_string(),

query: Some(Box::new(query)),

..Default::default(),

};

let search_res = search_api.search(search_req).await;res = await searchApi.search({"table":"test_pq","query":{"match_all":{}}});query := map[string]interface{} {}

percolateRequestQuery := manticoreclient.NewPercolateRequestQuery(query)

percolateRequest := manticoreclient.NewPercolateRequest(percolateRequestQuery)

res, _, _ := apiClient.SearchAPI.Percolate(context.Background(), "test_pq").PercolateRequest(*percolateRequest).Execute()+------+-------------+------+-------------------+

| id | query | tags | filters |

+------+-------------+------+-------------------+

| 1 | filter test | | gid>=10 |

| 2 | angry | | gid>=10 OR gid<=3 |

+------+-------------+------+-------------------+

2 rows in set (0.01 sec){

"took":0,

"timed_out":false,

"hits":{

"total":2,

"hits":[

{

"_id": 1,

"_score":1,

"_source":{

"query":{ "ql":"filter test" },

"tags":"",

"filters":"gid>=10"

}

},

{

"_id": 2,

"_score":1,

"_source":{

"query":{"ql":"angry"},

"tags":"",

"filters":"gid>=10 OR gid<=3"

}

}

]

}

}(

[took] => 0

[timed_out] =>

[hits] =>

(

[total] => 2

[hits] =>

(

[0] =>

(

[_id] => 1

[_score] => 1

[_source] =>

(

[query] =>

(

[ql] => filter test

)

[tags] =>

[filters] => gid>=10

)

),

[1] =>

(

[_id] => 1

[_score] => 1

[_source] =>

(

[query] =>

(

[ql] => angry

)

[tags] =>

[filters] => gid>=10 OR gid<=3

)

)

)

)

){'hits': {'hits': [{u'_id': u'2811025403043381501',

u'_score': 1,

u'_source': {u'filters': u"gid>=10",

u'query': u'filter test',

u'tags': u''}},

{u'_id': u'2811025403043381502',

u'_score': 1,

u'_source': {u'filters': u"gid>=10 OR gid<=3",

u'query': u'angry',

u'tags': u''}}],

'total': 2},

'profile': None,

'timed_out': False,

'took': 0}{'hits': {'hits': [{u'_id': u'2811025403043381501',

u'_score': 1,

u'_source': {u'filters': u"gid>=10",

u'query': u'filter test',

u'tags': u''}},

{u'_id': u'2811025403043381502',

u'_score': 1,

u'_source': {u'filters': u"gid>=10 OR gid<=3",

u'query': u'angry',

u'tags': u''}}],

'total': 2},

'profile': None,

'timed_out': False,

'took': 0}{"hits": {"hits": [{"_id": 2811025403043381501,

"_score": 1,

"_source": {"filters": u"gid>=10",

"query": "filter test",

"tags": ""}},

{"_id": 2811025403043381502,

"_score": 1,

"_source": {"filters": u"gid>=10 OR gid<=3",

"query": "angry",

"tags": ""}}],

"total": 2},

"timed_out": false,

"took": 0}class SearchResponse {

took: 0

timedOut: false

hits: class SearchResponseHits {

total: 2

maxScore: null

hits: [{_id=2811045522851233962, _score=1, _source={filters=gid>=10, query=filter test, tags=}}, {_id=2811045522851233951, _score=1, _source={filters=gid>=10 OR gid<=3, query=angry,tags=}}]

aggregations: null

}

profile: null

}class SearchResponse {

took: 0

timedOut: false

hits: class SearchResponseHits {

total: 2

maxScore: null

hits: [{_id=2811045522851233962, _score=1, _source={filters=gid>=10, query=filter test, tags=}}, {_id=2811045522851233951, _score=1, _source={filters=gid>=10 OR gid<=3, query=angry,tags=}}]

aggregations: null

}

profile: null

}class SearchResponse {

took: 0

timedOut: false

hits: class SearchResponseHits {

total: 2

maxScore: null

hits: [{_id=2811045522851233962, _score=1, _source={filters=gid>=10, query=filter test, tags=}}, {_id=2811045522851233951, _score=1, _source={filters=gid>=10 OR gid<=3, query=angry,tags=}}]

aggregations: null

}

profile: null

}{

'hits':

{

'hits':

[{

'_id': '2811025403043381501',

'_score': 1,

'_source':

{

'filters': "gid>=10",

'query': 'filter test',

'tags': ''

}

},

{

'_id':

'2811025403043381502',

'_score': 1,

'_source':

{

'filters': "gid>=10 OR gid<=3",

'query': 'angry',

'tags': ''

}

}],

'total': 2

},

'profile': None,

'timed_out': False,

'took': 0

}{

'hits':

{

'hits':

[{

'_id': '2811025403043381501',

'_score': 1,

'_source':

{

'filters': "gid>=10",

'query': 'filter test',

'tags': ''

}

},

{

'_id':

'2811025403043381502',

'_score': 1,

'_source':

{

'filters': "gid>=10 OR gid<=3",

'query': 'angry',

'tags': ''

}

}],

'total': 2

},

'profile': None,

'timed_out': False,

'took': 0

}And you execute CALL PQ on the distributed table with a couple of documents.

- SQL

- JSON

- PHP

- Python

- Python-asyncio

- javascript

- Java

- C#

- Rust

- TypeScript

- Go

CALL PQ ('pq_d2', ('{"title":"angry test", "gid":3 }', '{"title":"filter test doc2", "gid":13}'), 1 AS docs);POST /pq/pq/search -d '

"query":

{

"percolate":

{

"documents" : [

{ "title": "angry test", "gid": 3 },

{ "title": "filter test doc2", "gid": 13 }

]

}

}

'$params = [

'table' => 'pq',

'body' => [

'query' => [

'percolate' => [

'documents' => [

[

'title'=>'angry test',

'gid' => 3

],

[

'title'=>'filter test doc2',

'gid' => 13

],

]

]

]

]

];

$response = $client->pq()->search($params);searchApi.percolate('pq',{"percolate":{"documents":[{"title":"angry test","gid":3},{"title":"filter test doc2","gid":13}]}})await searchApi.percolate('pq',{"percolate":{"documents":[{"title":"angry test","gid":3},{"title":"filter test doc2","gid":13}]}})res = await searchApi.percolate('pq',{"percolate":{"documents":[{"title":"angry test","gid":3},{"title":"filter test doc2","gid":13}]}});percolateRequest = new PercolateRequest();

query = new HashMap<String,Object>(){{

put("percolate",new HashMap<String,Object >(){{

put("documents", new ArrayList<Object>(){{

add(new HashMap<String,Object >(){{

put("title","angry test");

put("gid",3);

}});

add(new HashMap<String,Object >(){{

put("title","filter test doc2");

put("gid",13);

}});

}});

}});

}};

percolateRequest.query(query);

searchApi.percolate("pq",percolateRequest);var doc1 = new Dictionary<string, Object>();

doc1.Add("title","angry test");

doc1.Add("gid",3);

var doc2 = new Dictionary<string, Object>();

doc2.Add("title","filter test doc2");

doc2.Add("gid",13);

var docs = new List<Object> {doc1, doc2};

Dictionary<string, Object> percolateDoc = new Dictionary<string, Object> {{ "documents", docs }};

Dictionary<string, Object> query = new Dictionary<string, Object> {{ "percolate", percolateDoc }};

PercolateRequest percolateRequest = new PercolateRequest(query=query);

searchApi.Percolate("pq",percolateRequest);let mut percolate_doc_fields1 = HashMap::new();

percolate_doc_fields1.insert("title".to_string(), "angry test");

percolate_doc_fields1.insert("gid".to_string(), 3);

let mut percolate_doc_fields2 = HashMap::new();

percolate_doc_fields2.insert("title".to_string(), "filter test doc2");

percolate_doc_fields2.insert("gid".to_string(), 13);

let mut percolate_doc_fields_list: [HashMap; 2] = [percolate_doc_fields1, percolate_doc_fields2];

let mut percolate_doc = HashMap::new();

percolate_doc.insert("documents".to_string(), percolate_doc_fields_list);

let percolate_query = PercolateRequestQuery::new(serde_json::json!(percolate_doc));

let percolate_req = PercolateRequest::new(percolate_query);

search_api.percolate("pq", percolate_req).await;docs = [ {title : 'What a nice bag'}, {title : 'Really nice shoes'} ];

res = await searchApi.percolate('test_pq', { query: { percolate: { documents : docs } } } );doc1 := map[string]interface{} {"title": "What a nice bag"}

doc2 := map[string]interface{} {"title": "Really nice shoes"}

query := []interface{} {doc1, doc2}

percolateRequestQuery := manticoreclient.NewPercolateQuery(query)

percolateRequest := manticoreclient.NewPercolateRequest(percolateRequestQuery)

res, _, _ := apiClient.SearchAPI.Percolate(context.Background(), "test_pq").PercolateRequest(*percolateRequest).Execute()+------+-----------+

| id | documents |

+------+-----------+

| 1 | 2 |

| 2 | 1 |

+------+-----------+{

"took":0,

"timed_out":false,

"hits":{

"total":2,"hits":[

{

"_id": 2,

"_score":1,

"_source":{

"query":{"title":"angry"},

"tags":"",

"filters":"gid>=10 OR gid<=3"

}

}

{

"_id": 1,

"_score":1,

"_source":{

"query":{"ql":"filter test"},

"tags":"",

"filters":"gid>=10"

}

},

]

}

}(

[took] => 0

[timed_out] =>

[hits] =>

(

[total] => 2

[hits] =>

(

[0] =>

(

[_index] => pq

[_type] => doc

[_id] => 2

[_score] => 1

[_source] =>

(

[query] =>

(

[ql] => angry

)

[tags] =>

[filters] => gid>=10 OR gid<=3

),

[fields] =>

(

[_percolator_document_slot] =>

(

[0] => 1

)

)

),

[1] =>

(

[_index] => pq

[_id] => 1

[_score] => 1

[_source] =>

(

[query] =>

(

[ql] => filter test

)

[tags] =>

[filters] => gid>=10

)

[fields] =>

(

[_percolator_document_slot] =>

(

[0] => 0

)

)

)

)

)

){'hits': {'hits': [{u'_id': u'2811025403043381480',

u'table': u'pq',

u'_score': u'1',

u'_source': {u'query': {u'ql': u'angry'},u'tags':u'',u'filters':u"gid>=10 OR gid<=3"},

u'_type': u'doc',

u'fields': {u'_percolator_document_slot': [1]}},

{u'_id': u'2811025403043381501',

u'table': u'pq',

u'_score': u'1',

u'_source': {u'query': {u'ql': u'filter test'},u'tags':u'',u'filters':u"gid>=10"},

u'_type': u'doc',

u'fields': {u'_percolator_document_slot': [1]}}],

'total': 2},

'profile': None,

'timed_out': False,

'took': 0}{'hits': {'hits': [{u'_id': u'2811025403043381480',

u'table': u'pq',

u'_score': u'1',

u'_source': {u'query': {u'ql': u'angry'},u'tags':u'',u'filters':u"gid>=10 OR gid<=3"},

u'_type': u'doc',

u'fields': {u'_percolator_document_slot': [1]}},

{u'_id': u'2811025403043381501',

u'table': u'pq',

u'_score': u'1',

u'_source': {u'query': {u'ql': u'filter test'},u'tags':u'',u'filters':u"gid>=10"},

u'_type': u'doc',

u'fields': {u'_percolator_document_slot': [1]}}],

'total': 2},

'profile': None,

'timed_out': False,

'took': 0}{'hits': {'hits': [{u'_id': u'2811025403043381480',

u'table': u'pq',

u'_score': u'1',

u'_source': {u'query': {u'ql': u'angry'},u'tags':u'',u'filters':u"gid>=10 OR gid<=3"},

u'_type': u'doc',

u'fields': {u'_percolator_document_slot': [1]}},

{u'_id': u'2811025403043381501',

u'table': u'pq',

u'_score': u'1',

u'_source': {u'query': {u'ql': u'filter test'},u'tags':u'',u'filters':u"gid>=10"},

u'_type': u'doc',

u'fields': {u'_percolator_document_slot': [1]}}],

'total': 2},

'profile': None,

'timed_out': False,

'took': 0}class SearchResponse {

took: 10

timedOut: false

hits: class SearchResponseHits {

total: 2

maxScore: 1

hits: [{_index=pq, _type=doc, _id=2811045522851234165, _score=1, _source={query={ql=@title angry}}, fields={_percolator_document_slot=[1]}}, {_index=pq, _type=doc, _id=2811045522851234166, _score=1, _source={query={ql=@title filter test doc2}}, fields={_percolator_document_slot=[2]}}]

aggregations: null

}

profile: null

}class SearchResponse {

took: 10

timedOut: false

hits: class SearchResponseHits {

total: 2

maxScore: 1

hits: [{_index=pq, _type=doc, _id=2811045522851234165, _score=1, _source={query={ql=@title angry}}, fields={_percolator_document_slot=[1]}}, {_index=pq, _type=doc, _id=2811045522851234166, _score=1, _source={query={ql=@title filter test doc2}}, fields={_percolator_document_slot=[2]}}]

aggregations: null

}

profile: null

}class SearchResponse {

took: 10

timedOut: false

hits: class SearchResponseHits {

total: 2

maxScore: 1

hits: [{_index=pq, _type=doc, _id=2811045522851234165, _score=1, _source={query={ql=@title angry}}, fields={_percolator_document_slot=[1]}}, {_index=pq, _type=doc, _id=2811045522851234166, _score=1, _source={query={ql=@title filter test doc2}}, fields={_percolator_document_slot=[2]}}]

aggregations: null

}

profile: null

}{

"took": 0,

"timed_out": false,

"hits": {

"total": 2,

"hits": [

{

"table": "test_pq",

"_type": "doc",

"_id": 1657852401006149661,

"_score": "1",

"_source": {

"query": {

"ql": "@title bag"

}

},

"fields": {

"_percolator_document_slot": [

1

]

}

},

{

"table": "test_pq",

"_type": "doc",

"_id": 1657852401006149662,

"_score": "1",

"_source": {

"query": {

"ql": "@title shoes"

}

},

"fields": {

"_percolator_document_slot": [

1

]

}

}

]

}

}{

"took": 0,

"timed_out": false,

"hits": {

"total": 2,

"hits": [

{

"table": "test_pq",

"_type": "doc",

"_id": 1657852401006149661,

"_score": "1",

"_source": {

"query": {

"ql": "@title bag"

}

},

"fields": {

"_percolator_document_slot": [

1

]

}

},

{

"table": "test_pq",

"_type": "doc",

"_id": 1657852401006149662,

"_score": "1",

"_source": {

"query": {

"ql": "@title shoes"

}

},

"fields": {

"_percolator_document_slot": [

1

]

}

}

]

}

}In the previous example, we used the default sparse mode. To demonstrate the sharded mode, let's create a distributed PQ table consisting of 2 local PQ tables and add 2 documents to "products1" and 1 document to "products2":

create table products1(title text, color string) type='pq';

create table products2(title text, color string) type='pq';

create table products_distributed type='distributed' local='products1' local='products2';

INSERT INTO products1(query) values('@title bag');

INSERT INTO products1(query,filters) values('@title shoes', 'color=\'red\'');

INSERT INTO products2(query,filters) values('@title shoes', 'color in (\'blue\', \'green\')');Now, if you add 'sharded' as mode to CALL PQ, it will send the documents to all the agent's tables (in this case, just local tables, but they can be remote to utilize external hardware). This mode is not available via the JSON interface.

- SQL

CALL PQ('products_distributed', ('{"title": "nice pair of shoes", "color": "blue"}', '{"title": "beautiful bag"}'), 'sharded' as mode, 1 as query);+---------------------+--------------+------+---------------------------+

| id | query | tags | filters |

+---------------------+--------------+------+---------------------------+

| 1657852401006149639 | @title bag | | |

| 1657852401006149643 | @title shoes | | color IN ('blue, 'green') |

+---------------------+--------------+------+---------------------------+Note that the syntax of agent mirrors in the configuration (when several hosts are assigned to one agent line, separated with |) has nothing to do with the CALL PQ query mode. Each agent always represents one node, regardless of the number of HA mirrors specified for that agent.

In some cases, you might want to get more details about the performance of a percolate query. For that purpose, there is the option 1 as verbose, which is only available via SQL and allows you to save more performance metrics. You can see them using the SHOW META query, which you can run after CALL PQ. See SHOW META for more info.

- 1 as verbose

- 0 as verbose

CALL PQ('products', ('{"title": "nice pair of shoes", "color": "blue"}', '{"title": "beautiful bag"}'), 1 as verbose); show meta;CALL PQ('products', ('{"title": "nice pair of shoes", "color": "blue"}', '{"title": "beautiful bag"}'), 0 as verbose); show meta;+---------------------+

| id |

+---------------------+

| 1657852401006149644 |

| 1657852401006149646 |

+---------------------+

+-------------------------+-----------+

| Variable name | Value |

+-------------------------+-----------+

| total | 0.000 sec |

| setup | 0.000 sec |

| queries_matched | 2 |

| queries_failed | 0 |

| document_matched | 2 |

| total_queries_stored | 3 |

| term_only_queries | 3 |

| fast_rejected_queries | 0 |

| time_per_query | 27, 10 |

| time_of_matched_queries | 37 |

+-------------------------+-----------++---------------------+

| id |

+---------------------+

| 1657852401006149644 |

| 1657852401006149646 |

+---------------------+

+-----------------------+-----------+

| Variable name | Value |

+-----------------------+-----------+

| total | 0.000 sec |

| queries_matched | 2 |

| queries_failed | 0 |

| document_matched | 2 |

| total_queries_stored | 3 |

| term_only_queries | 3 |

| fast_rejected_queries | 0 |

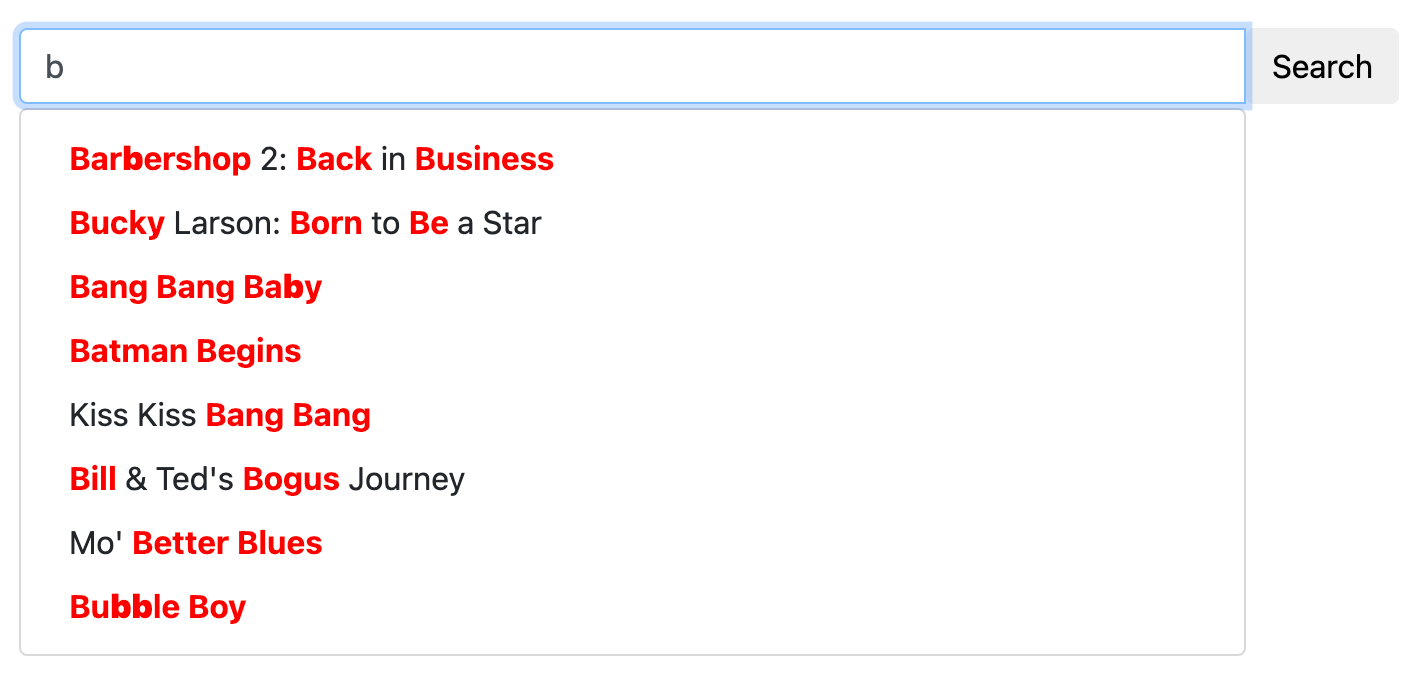

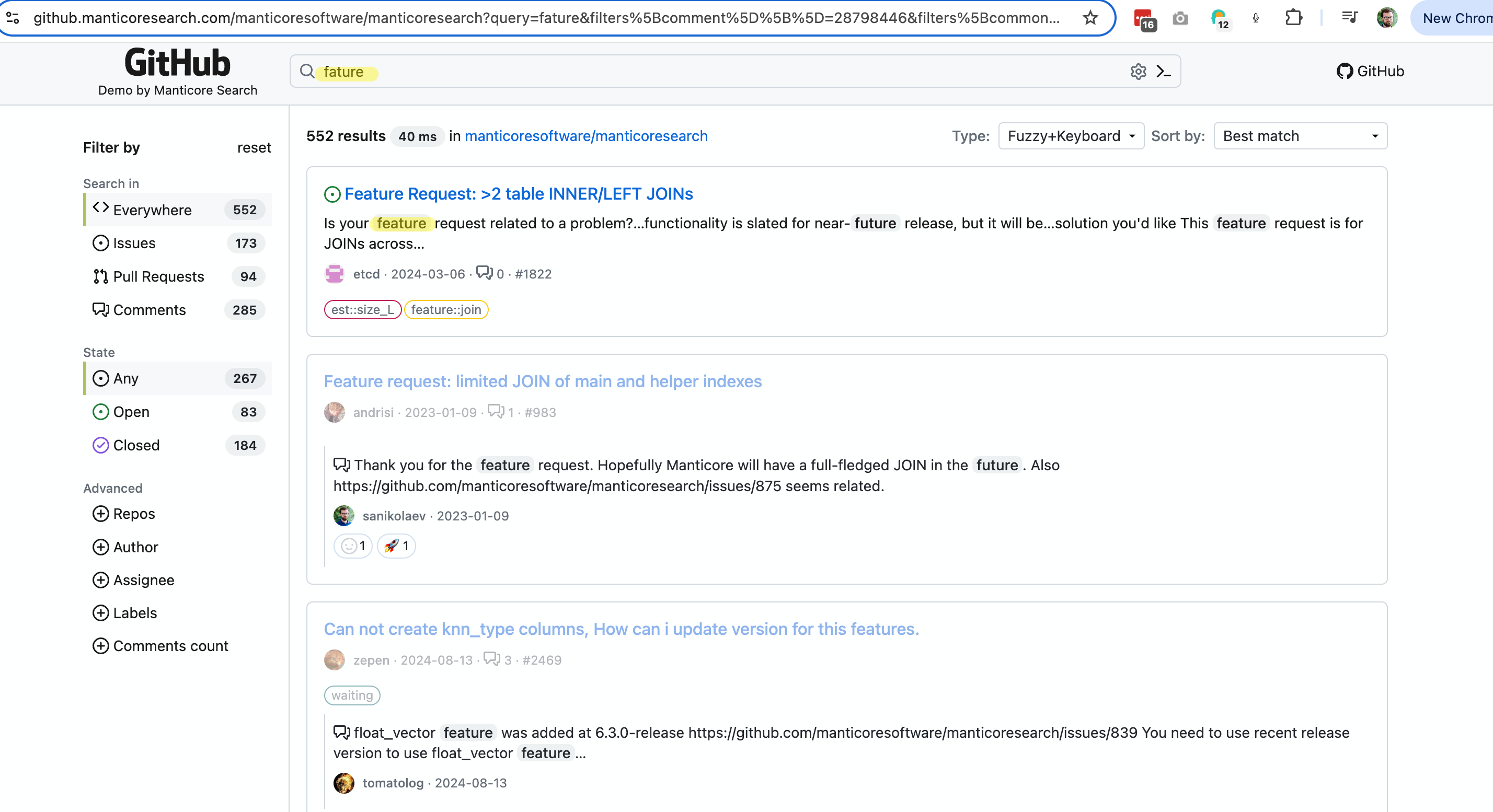

+-----------------------+-----------+Autocomplete, or word completion, predicts and suggests the end of a word or phrase as you type. It's commonly used in:

- Search boxes on websites

- Suggestions in search engines

- Text fields in apps

Manticore offers an advanced autocomplete feature that gives suggestions while you type, similar to those in well-known search engines. This helps speed up searches and lets users find what they need faster.

In addition to basic autocomplete functionality, Manticore includes advanced features to enhance the user experience:

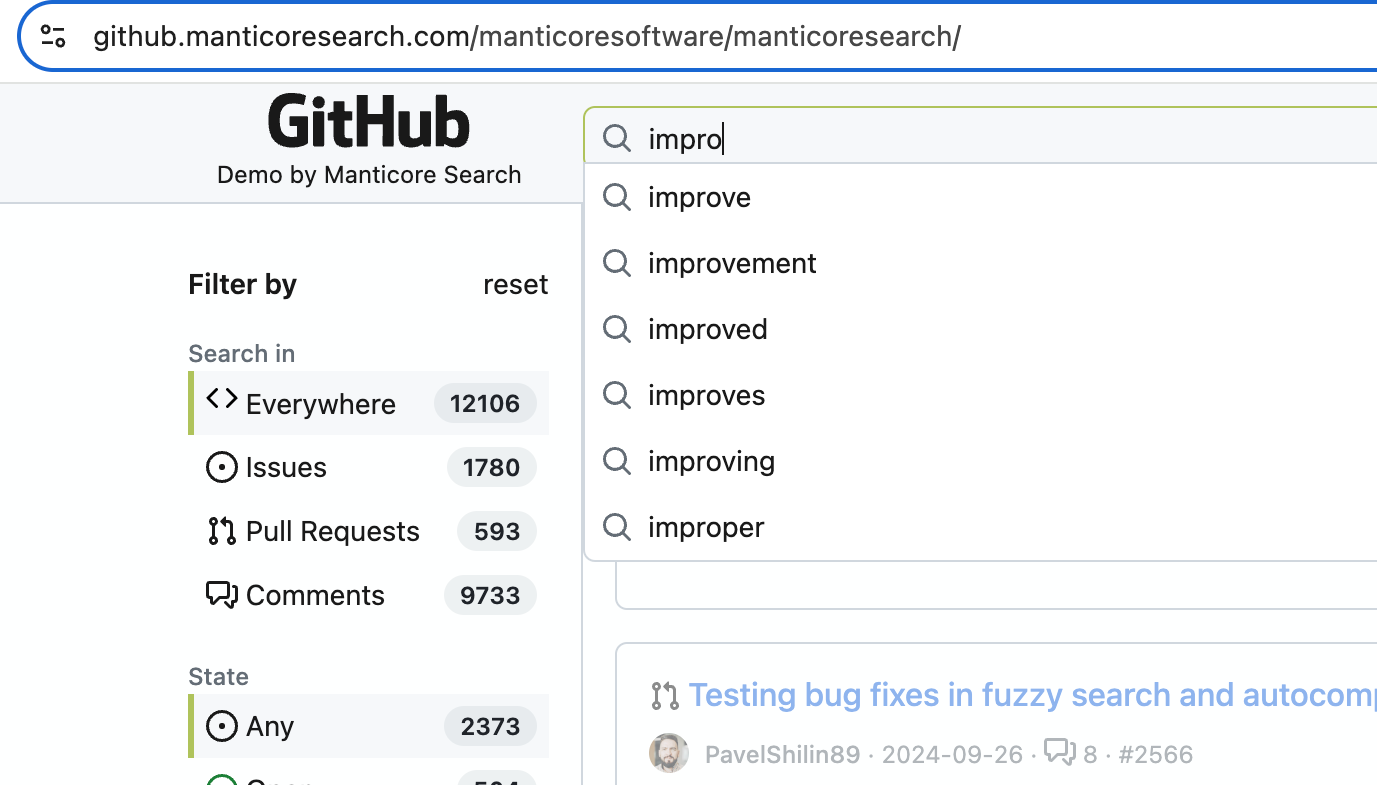

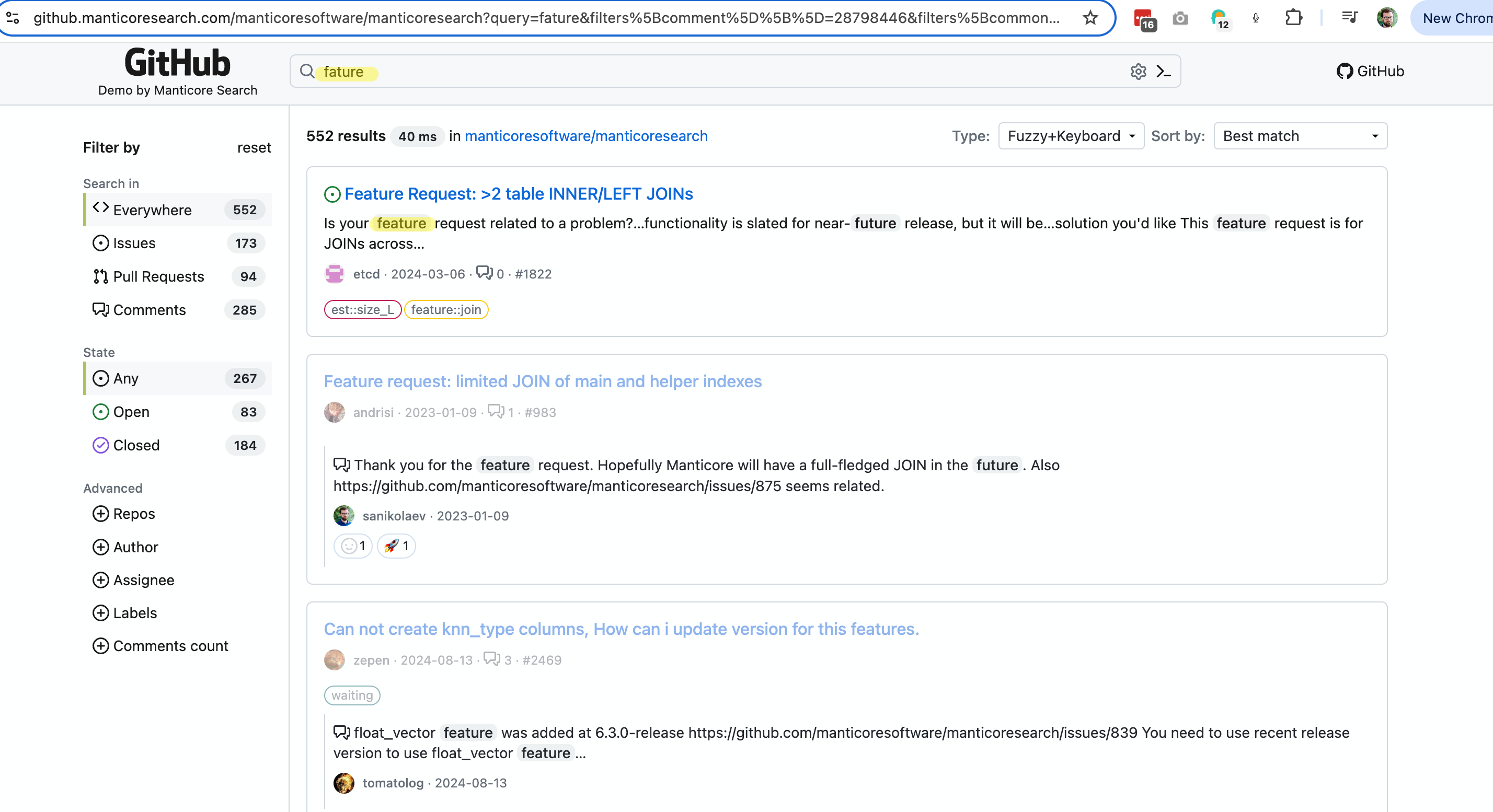

- Spell Correction (Fuzziness): Manticore's autocomplete helps correct spelling mistakes by using algorithms that recognize and fix common errors. This means even if you type something wrong, you can still find what you were looking for.

- Keyboard Layout Autodetection: Manticore can figure out which keyboard layout you are using. This is really useful in places where many languages are used, or if you accidentally type in the wrong language. For example, if you type "ghbdtn" by mistake, Manticore knows you meant to say "привет" (hello in Russian) and suggests the correct word.

Manticore's autocomplete can be tailored to match different needs and settings, making it a flexible tool for many applications.

NOTE:

CALL AUTOCOMPLETEand/autocompleterequire Manticore Buddy. If it doesn't work, make sure Buddy is installed.

To use autocomplete in Manticore, use the CALL AUTOCOMPLETE SQL statement or its JSON equivalent /autocomplete. This feature provides word completion suggestions based on your indexed data.

Before you proceed, ensure that the table you intend to use for autocomplete has infixes enabled.

Note: There's an automatic check for min_infix_len in the table settings, which uses a 30-second cache to improve the performance of CALL AUTOCOMPLETE. After making changes to your table, there may be a brief delay the first time you use CALL AUTOCOMPLETE (though this is usually not noticeable). Only successful results are cached, so if you remove the table or disable min_infix_len, CALL AUTOCOMPLETE may temporarily return incorrect results until it eventually starts showing an error related to min_infix_len.

CALL AUTOCOMPLETE('query_beginning', 'table', [...options]);POST /autocomplete

{

"table":"table_name",

"query":"query_beginning"

[,"options": {<autocomplete options>}]

}layouts: A comma-separated string of keyboard layout codes for detecting typing errors caused by keyboard layout mismatches (e.g., typing "ghbdtn" instead of "привет" when using wrong layout). Manticore compares character positions across different layouts to suggest corrections. Requires at least 2 layouts to effectively detect mismatches. Available options: us, ru, ua, se, pt, no, it, gr, uk, fr, es, dk, de, ch, br, bg, be (more details here). Default: nonefuzziness:0,1, or2(default:2). Maximum Levenshtein distance for finding typos. Set to0to disable fuzzy matchingpreserve:0or1(default:0). When set to1, keeps words that don't have fuzzy matches in the search results (e.g., "hello wrld" returns both "hello wrld" and "hello world"). When set to0, only returns words with successful fuzzy matches (e.g., "hello wrld" returns only "hello world"). Particularly useful for preserving short words or proper nouns that may not exist in Manticore Searchprepend: Boolean (0/1 in SQL). If true(1), adds an asterisk before the last word for prefix expansion (e.g.,*word)append: Boolean (0/1 in SQL). If true(1), adds an asterisk after the last word for suffix expansion (e.g.,word*)expansion_len: Number of characters to expand in the last word. Default:10force_bigrams: Boolean (0/1 in SQL). Forces the use of bigrams (2-character n-grams) instead of trigrams for all word lengths, which can improve matching for words with transposition errors. Default:0(use trigrams for words ≥6 characters)

- SQL

- SQL with no fuzzy search

- JSON

- SQL with preserve option

- JSON with preserve option

mysql> CALL AUTOCOMPLETE('hello', 'comment');

+------------+

| query |

+------------+

| hello |

| helio |

| hell |

| shell |

| nushell |

| powershell |

| well |

| help |