Manticore Search is a high-performance, multi-storage database purpose-built for search and analytics, offering lightning-fast full-text search, real-time indexing, and advanced features like vector search and columnar storage for efficient data analysis. Designed to handle both small and large datasets, it delivers seamless scalability and powerful insights for modern applications.

Manticore Search is an open-source database (available on GitHub) created in 2017 as a continuation of the Sphinx Search engine. Our development team took the best features of Sphinx, significantly improved its functionality, and fixed hundreds of bugs along the way (as detailed in our Changelog). Built on a nearly complete rewrite of its predecessor, Manticore Search is a modern, fast, and lightweight database with exceptional full-text search capabilities.

Its full-text search features include:

- Query autocomplete

- Fuzzy search

- Over 20 full-text operators and over 20 ranking factors

- Custom ranking

- Stemming

- Lemmatization

- Stopwords

- Synonyms

- Wordforms

- Advanced tokenization at character and word level

- Proper Chinese segmentation

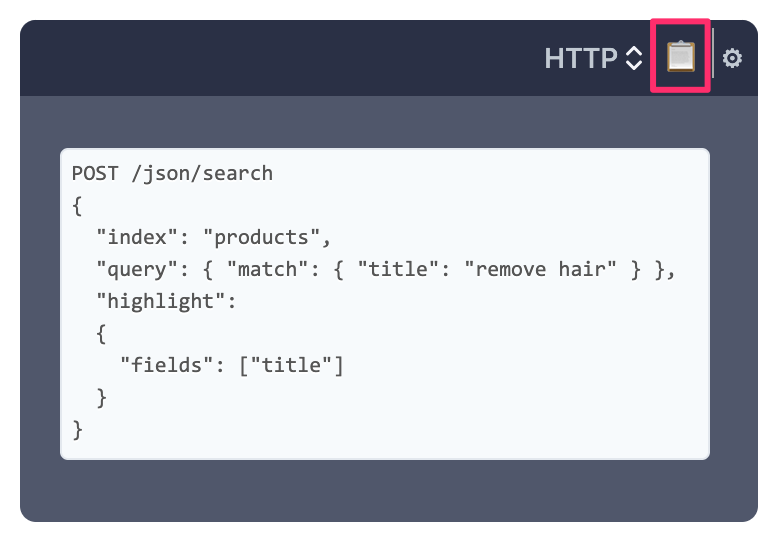

- Text highlighting
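Fuzzy search lets a query tolerate typos by also accepting terms that are a few edits away. As a rough illustration only, the Python sketch below measures closeness with the classic Levenshtein edit distance over a hypothetical in-memory vocabulary (`fuzzy_matches` and `max_distance` are names made up for this example). It is not Manticore's implementation, which works against its own indexes and options.

```python
def levenshtein(a: str, b: str) -> int:
    """Minimum number of single-character insertions, deletions, or
    substitutions that turn a into b (two-row dynamic programming)."""
    if len(a) < len(b):
        a, b = b, a  # distance is symmetric; keep the shorter string in the inner loop
    prev = list(range(len(b) + 1))  # distances from "" to each prefix of b
    for i, ca in enumerate(a, start=1):
        cur = [i]
        for j, cb in enumerate(b, start=1):
            cur.append(min(
                prev[j] + 1,               # delete ca
                cur[j - 1] + 1,            # insert cb
                prev[j - 1] + (ca != cb),  # substitute, free when the characters match
            ))
        prev = cur
    return prev[-1]


def fuzzy_matches(term: str, vocabulary: list[str], max_distance: int = 2) -> list[str]:
    """Vocabulary words within max_distance edits of term, closest first."""
    scored = sorted((levenshtein(term, word), word) for word in vocabulary)
    return [word for dist, word in scored if dist <= max_distance]


print(fuzzy_matches("serch", ["search", "seat", "church", "serve"], max_distance=1))  # ['search']
```

Scanning the whole vocabulary is fine for a demo; a search engine would typically narrow the candidate terms first (for example by shared character n-grams) before computing exact distances.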

Manticore Search lets you attach embeddings generated by your machine learning models to each document and then run nearest-neighbor searches on them. This enables features such as similarity search, recommendations, semantic search, and relevance ranking based on NLP algorithms, as well as image, video, and sound search.
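To make the nearest-neighbor idea concrete, here is a minimal Python sketch of exact k-nearest-neighbor search by cosine similarity over a few toy 2-D vectors. The function names and data are hypothetical; real vector search engines usually rely on approximate indexes (such as HNSW graphs) instead of comparing the query against every stored vector.

```python
import heapq
import math


def cosine_similarity(u: list[float], v: list[float]) -> float:
    """Cosine of the angle between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def knn(query: list[float], docs: dict[int, list[float]], k: int = 3) -> list[int]:
    """Exact (brute-force) k-nearest-neighbor search.

    docs maps a document id to its embedding; the result holds the ids of the
    k most similar embeddings, most similar first.
    """
    return heapq.nlargest(k, docs, key=lambda doc_id: cosine_similarity(query, docs[doc_id]))


docs = {1: [1.0, 0.0], 2: [0.0, 1.0], 3: [0.7, 0.7]}  # toy 2-D "embeddings"
print(knn([1.0, 0.1], docs, k=2))  # [1, 3]
```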

Manticore Search supports JOIN queries via SQL and JSON, allowing you to combine data from multiple tables.

Manticore Search uses smart query parallelization to lower response times and make full use of all CPU cores when needed.
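The general pattern behind query parallelization is split, search, merge: divide the data into chunks, search each chunk on its own worker, then combine the partial results. The Python sketch below illustrates only that pattern with hypothetical `search_chunk` and `parallel_search` helpers. It is not how Manticore's C++ daemon schedules work, and because of CPython's global interpreter lock, threads will not actually speed up this pure-Python scan.

```python
import heapq
from concurrent.futures import ThreadPoolExecutor


def search_chunk(chunk, predicate, k):
    """Scan one chunk of (doc_id, score, doc) rows and keep its local top-k."""
    hits = ((score, doc_id) for doc_id, score, doc in chunk if predicate(doc))
    return heapq.nlargest(k, hits)


def parallel_search(rows, predicate, k=10, workers=4):
    """Split rows into chunks, search them concurrently, and merge the partial top-k lists."""
    size = max(1, -(-len(rows) // workers))  # ceiling division: about one chunk per worker
    chunks = [rows[i:i + size] for i in range(0, len(rows), size)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partials = list(pool.map(lambda chunk: search_chunk(chunk, predicate, k), chunks))
    # The global top-k is always contained in the union of the per-chunk top-k lists.
    merged = heapq.nlargest(k, (hit for part in partials for hit in part))
    return [doc_id for score, doc_id in merged]
```

Merging only each chunk's top-k keeps the final step cheap no matter how large the chunks are.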

The cost-based query optimizer uses statistics about the indexed data to estimate the relative costs of different execution plans for a given query. This lets it choose the most efficient plan for retrieving the desired results, taking into account factors such as the size of the indexed data, the complexity of the query, and the available resources.
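As a toy illustration of cost-based planning, the sketch below estimates how many rows a range filter matches from a histogram, then compares the estimated cost of a full scan against a secondary-index lookup. The histogram, the cost constants, and the plan names are all hypothetical; Manticore's real statistics and cost model are internal and considerably richer.

```python
from dataclasses import dataclass


@dataclass
class Plan:
    name: str
    cost: float


def estimate_matches(histogram, lo, hi):
    """Estimate how many rows have lo <= value < hi.

    histogram is a list of (bucket_start, bucket_end, row_count); a bucket that is
    only partly covered contributes proportionally (assumes values are uniform
    inside each bucket).
    """
    total = 0.0
    for start, end, count in histogram:
        overlap = max(0, min(hi, end) - max(lo, start))
        total += count * overlap / (end - start)
    return total


def choose_plan(total_rows, est_matches, scan_cost_per_row=1.0, index_cost_per_match=4.0):
    """Return the cheaper of a full scan and a secondary-index lookup.

    Hypothetical cost constants: a scan reads every row cheaply, while an index
    lookup pays more per matching row but skips the non-matching ones.
    """
    plans = [
        Plan("full_scan", total_rows * scan_cost_per_row),
        Plan("secondary_index", est_matches * index_cost_per_match),
    ]
    return min(plans, key=lambda plan: plan.cost)


histogram = [(0, 100, 500_000), (100, 200, 400_000), (200, 300, 100_000)]
total = sum(count for _, _, count in histogram)
print(choose_plan(total, estimate_matches(histogram, 250, 300)).name)  # secondary_index
print(choose_plan(total, estimate_matches(histogram, 0, 200)).name)    # full_scan
```

A selective filter favors the index, while a filter matching most rows makes the plain scan cheaper, which is exactly the decision the statistics are there to inform.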

Manticore offers both row-wise and column-oriented storage to accommodate datasets of various sizes. The traditional, default row-wise storage is available for datasets of all sizes (small, medium, and large), while columnar storage is provided through the Manticore Columnar Library for even larger datasets. The key difference is that row-wise storage requires all attributes (excluding full-text fields) to be kept in RAM for optimal performance, while columnar storage does not. Columnar storage therefore consumes less RAM, at the cost of potentially slightly slower performance (as shown by the statistics on https://db-benchmarks.com/).

Manticore Columnar Library uses the Piecewise Geometric Model (PGM) index, which exploits a learned mapping between the indexed keys and their locations in memory. The succinctness of this mapping, coupled with a recursive construction algorithm, lets the PGM-index use orders of magnitude less space than traditional indexes while still offering excellent query and update performance. Secondary indexes are enabled by default for all numeric and string attributes and can be enabled for JSON attributes.
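The core idea of a learned index can be shown with a simplified, single-level variant: cover the sorted keys with line segments that predict each key's position to within a fixed error `eps`, then binary-search only that small window. The Python below is a sketch under those assumptions (greedy segment construction, distinct integer keys, no updates). The actual PGM-index uses an optimal segmentation and builds the same structure recursively over the segment start keys, and Manticore's implementation is in C++.

```python
import bisect


def build_segments(keys, eps):
    """Cover sorted, distinct integer keys with linear segments.

    Each segment (first_key, first_pos, slope) predicts the position of every
    key it covers to within +/- eps slots. Greedy "shrinking cone": extend the
    segment while some slope still keeps all its points inside the error band.
    """
    segments, i, n = [], 0, len(keys)
    while i < n:
        k0, lo, hi = keys[i], 0.0, float("inf")
        j = i + 1
        while j < n:
            dx, dy = keys[j] - k0, j - i
            new_lo = max(lo, (dy - eps) / dx)  # smallest slope keeping point j in range
            new_hi = min(hi, (dy + eps) / dx)  # largest slope keeping point j in range
            if new_lo > new_hi:
                break  # no single line fits any more: start a new segment at j
            lo, hi, j = new_lo, new_hi, j + 1
        slope = (lo + hi) / 2 if hi != float("inf") else 0.0
        segments.append((k0, i, slope))
        i = j
    return segments


class LearnedIndex:
    def __init__(self, keys, eps=8):
        self.keys, self.eps = keys, eps
        self.segments = build_segments(keys, eps)
        # The real PGM-index indexes these start keys recursively with more
        # segments; a binary search over them stands in for that layer here.
        self.starts = [seg[0] for seg in self.segments]

    def lookup(self, key):
        """Return the position of key in self.keys, or -1 if it is absent."""
        s = bisect.bisect_right(self.starts, key) - 1
        if s < 0:
            return -1
        k0, pos0, slope = self.segments[s]
        guess = pos0 + slope * (key - k0)
        # Only the window guess +/- eps (plus a slot of float slack) is searched.
        lo = max(0, int(guess - self.eps) - 1)
        hi = min(len(self.keys), int(guess + self.eps) + 2)
        p = bisect.bisect_left(self.keys, key, lo, hi)
        return p if p < len(self.keys) and self.keys[p] == key else -1
```

Raising `eps` produces fewer, longer segments (less memory) at the price of a wider window to search at lookup time, which is the space/time trade-off this family of indexes tunes.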

Manticore's native query language is SQL. It supports SQL over HTTP and over the MySQL protocol, so you can connect with popular MySQL clients in any programming language.
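Any MySQL client can connect to the MySQL-protocol listener (port 9306 by default). For SQL over HTTP, the Python sketch below only builds the request with the standard library. It assumes the default HTTP port 9308 and a `/sql?mode=raw` endpoint that takes a form-encoded `query` parameter; check these against the documentation for your version before relying on them. The `sql_request` helper is hypothetical.

```python
import urllib.parse
import urllib.request


def sql_request(query: str, host: str = "127.0.0.1", port: int = 9308) -> urllib.request.Request:
    """Build (without sending) an HTTP POST that runs one SQL statement.

    Assumed, not verified here: the HTTP listener is on port 9308 and the
    /sql?mode=raw endpoint accepts a form-encoded `query` parameter.
    """
    body = urllib.parse.urlencode({"query": query}).encode("utf-8")
    return urllib.request.Request(
        f"http://{host}:{port}/sql?mode=raw",
        data=body,
        headers={"Content-Type": "application/x-www-form-urlencoded"},
        method="POST",
    )


req = sql_request("SHOW TABLES")
# To actually run it against a live server:
# with urllib.request.urlopen(req) as resp:
#     print(resp.read().decode("utf-8"))
```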

For a more programmatic approach to managing data and schemas, Manticore also provides an HTTP JSON protocol similar to that of Elasticsearch.
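A JSON search request can be assembled with the standard library as well. This sketch assumes a `/search` endpoint on the default HTTP port 9308 and a body with `table`, `query.match`, and `limit` keys; recent documentation uses `table`, while older releases expected `index`, so verify which key your server accepts. The `json_search_request` helper, the `products` table, and the `title` field are hypothetical.

```python
import json
import urllib.request


def json_search_request(table: str, field: str, text: str, limit: int = 10,
                        host: str = "127.0.0.1", port: int = 9308) -> urllib.request.Request:
    """Build (without sending) a POST /search request with a full-text match query.

    Assumed body layout: "table", "query" -> "match", "limit".
    """
    body = {
        "table": table,
        "query": {"match": {field: text}},  # full-text match on one field
        "limit": limit,
    }
    return urllib.request.Request(
        f"http://{host}:{port}/search",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


req = json_search_request("products", "title", "wireless headphones", limit=5)
# Send it with urllib.request.urlopen(req) once the endpoint details are confirmed.
```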

Manticore also accepts Elasticsearch-compatible insert and replace JSON queries, which makes it possible to use it with Logstash (versions earlier than 7.13), Filebeat, and other tools from the Beats family.

Easily create, update, and delete tables online or through a configuration file.

The Manticore Search daemon is developed in C++, offering fast start times and efficient memory utilization. Low-level optimizations further enhance performance. Another crucial component, called Manticore Buddy, is written in PHP and is utilized for high-level functionality that does not require lightning-fast response times or extremely high processing power. Although contributing to the C++ code may pose a challenge, adding a new SQL/JSON command using Manticore Buddy should be a straightforward process.

Newly added or updated documents are available for reading immediately.

We offer free interactive courses to make learning effortless.

While Manticore is not fully ACID-compliant, it supports isolated transactions for atomic changes and binary logging for safe writes.
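Binary logging follows the general write-ahead principle: record a change durably first, apply it second, and replay the log after a crash. The toy Python store below demonstrates that principle for atomic, multi-document commits. The `LoggedStore` class, the file layout, and the JSON record format are invented for illustration and are not Manticore's binlog format; in Manticore itself you group changes to a real-time table with `BEGIN` and `COMMIT`.

```python
import json
import os


class LoggedStore:
    """Toy document store: a transaction's changes are appended to a log and
    fsync'ed before they are applied, so a restart can replay committed work."""

    def __init__(self, log_path: str):
        self.log_path = log_path
        self.docs = {}
        self._replay()

    def _replay(self):
        if not os.path.exists(self.log_path):
            return
        good_bytes = 0
        with open(self.log_path, "rb") as log:
            for line in log:
                if not line.endswith(b"\n"):
                    break  # torn record from a crash mid-write: its transaction never committed
                for doc_id, doc in json.loads(line):
                    self.docs[doc_id] = doc
                good_bytes += len(line)
        with open(self.log_path, "r+b") as log:
            log.truncate(good_bytes)  # drop the torn tail so new records start cleanly

    def commit(self, changes):
        """Atomically apply a list of (doc_id, doc) pairs."""
        record = json.dumps([[doc_id, doc] for doc_id, doc in changes]) + "\n"
        with open(self.log_path, "a", encoding="utf-8") as log:
            log.write(record)       # 1. one log record per transaction
            log.flush()
            os.fsync(log.fileno())  # 2. make it durable
        for doc_id, doc in changes:  # 3. only now change the in-memory state
            self.docs[doc_id] = doc
```

Because each transaction is a single newline-terminated record, a crash mid-write leaves at most one incomplete last line, which replay discards, so a transaction is either fully applied or not at all.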

Data can be distributed across servers and data centers with any Manticore Search node acting as both a load balancer and a data node. Manticore implements virtually synchronous multi-master replication using the Galera library, ensuring data consistency across all nodes, preventing data loss, and providing exceptional replication performance.

Manticore provides an external tool, manticore-backup, and the BACKUP SQL command to simplify backing up and restoring your data. Alternatively, you can use mysqldump to make logical backups.

The indexer tool and comprehensive configuration syntax of Manticore make it easy to sync data from sources like MySQL, PostgreSQL, ODBC-compatible databases, XML, and CSV.

You can integrate Manticore Search with a MySQL/MariaDB server using the FEDERATED engine or via ProxySQL.

You can use Apache Superset and Grafana to visualize data stored in Manticore. Various MySQL tools can be used to develop Manticore queries interactively, such as HeidiSQL and DBForge.

You can also use Manticore Search with Kibana.

Manticore offers a special table type, the "percolate" table, which allows you to search queries instead of data, making it an efficient tool for filtering full-text data streams. Simply store your queries in the table, process your data stream by sending each batch of documents to Manticore Search, and receive only the results that match your stored queries.
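The percolate workflow can be mimicked in a few lines of Python. This sketch simplifies each stored query to "all of these words, none of those words" and uses a naive tokenizer; `PercolateSketch` and its methods are hypothetical. In Manticore you would instead insert full-text queries into a percolate table and match documents against them with `CALL PQ`.

```python
import re


def tokenize(text: str) -> set[str]:
    """Lowercased word tokens; a crude stand-in for Manticore's tokenizer."""
    return set(re.findall(r"\w+", text.lower()))


class PercolateSketch:
    """Stores queries, then reports which stored queries each incoming document matches.

    A stored query is simplified to "all of these words, none of those words";
    real percolate queries support the full query syntax and filters.
    """

    def __init__(self):
        self.queries: dict[int, tuple[set[str], set[str]]] = {}

    def store(self, query_id: int, must, must_not=()):
        self.queries[query_id] = ({w.lower() for w in must}, {w.lower() for w in must_not})

    def percolate(self, document: str) -> list[int]:
        words = tokenize(document)
        return sorted(
            query_id
            for query_id, (must, must_not) in self.queries.items()
            if must <= words and not (must_not & words)
        )


pq = PercolateSketch()
pq.store(1, ["error", "disk"])
pq.store(2, ["login"], must_not=["success"])
pq.store(3, ["error"])
print(pq.percolate("ERROR: disk /dev/sda1 is full"))  # [1, 3]
print(pq.percolate("login failed for user bob"))      # [2]
```

This version tests every stored query against every document; with many stored queries, an engine would typically pre-filter candidate queries by the terms they require before evaluating them.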

Manticore Search is versatile and can be applied in various scenarios, including:

- Full-Text Search:
  - Ideal for e-commerce platforms, enabling fast and accurate product searches with features like autocomplete and fuzzy search.
  - Perfect for content-heavy websites, allowing users to quickly find relevant articles or documents.

- Data Analytics:
  - Ingest data into Manticore Search using Beats/Logstash, Vector.dev, or Fluent Bit.
  - Analyze large datasets efficiently using Manticore's columnar storage and OLAP capabilities.
  - Perform complex queries on terabytes of data with minimal latency.
  - Visualize data using Kibana, Grafana, or Apache Superset.

- Faceted Search:
  - Let users filter search results by attributes such as price, brand, or date for a more refined search experience.

- Geo-Spatial Search:
  - Implement location-based searches, such as finding nearby restaurants or stores, with Manticore's geo-spatial capabilities.

- Spell Correction:
  - Automatically correct typos in users' search queries to improve search accuracy and user experience.

- Autocomplete:
  - Provide real-time suggestions as users type, enhancing search usability and speed.

- Data Stream Filtering:
  - Use percolate tables to efficiently filter and process real-time data streams, such as social media feeds or log data.
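For the geo-spatial scenario, Manticore provides the `GEODIST()` function for computing distances in queries. The Python sketch below shows one standard way to compute such distances, the haversine great-circle formula on a spherical Earth, plus a hypothetical `nearby` helper that filters places by radius. It is not a description of how `GEODIST()` is implemented, and the coordinates are made up.

```python
import math

EARTH_RADIUS_M = 6_371_000  # mean Earth radius in meters


def haversine_m(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in meters between two points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    d_phi = phi2 - phi1
    d_lambda = math.radians(lon2 - lon1)
    a = math.sin(d_phi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(d_lambda / 2) ** 2
    # min() guards against rounding pushing the argument of asin slightly above 1
    return 2 * EARTH_RADIUS_M * math.asin(min(1.0, math.sqrt(a)))


def nearby(places, lat: float, lon: float, radius_m: float):
    """(distance_m, name) for every (name, lat, lon) place within radius_m, nearest first."""
    hits = [(haversine_m(lat, lon, p_lat, p_lon), name) for name, p_lat, p_lon in places]
    return sorted(hit for hit in hits if hit[0] <= radius_m)


places = [("cafe", 0.0, 0.001), ("bakery", 0.0, 0.01), ("museum", 1.0, 1.0)]
print([name for _, name in nearby(places, 0.0, 0.0, radius_m=500)])  # ['cafe']
```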

Manticore Search has the following system requirements:

- Architecture: arm64 or x86_64

- OS: Debian-based (e.g. Debian, Ubuntu, Mint), RHEL-based (e.g. RHEL, CentOS, Alma, Oracle Linux, Amazon Linux), Windows, or macOS.

- Manticore Columnar Library, which provides columnar storage and secondary indexes, requires a CPU that supports SSE 4.2 or later.

- There are no specific disk space or RAM requirements. An empty Manticore Search instance uses only about 40 MB of RSS RAM.